Quality assessment of machine-translated post-edited subtitles: an analysis of Brazilian translators’ perceptions

Arlene Koglin

Federal University of Pernambuco

Federal University of Santa Catarina

https://orcid.org/0000-0003-0711-0811

Willian Henrique Cândido Moura

Federal University of Santa Catarina

willianmoura.tradutor@gmail.com

https://orcid.org/0000-0002-2675-6880

Morgana Aparecida de Matos

Federal University of Santa Catarina

https://orcid.org/0000-0001-5009-7638

João Gabriel Pereira da Silveira

Federal University of Santa Catarina

https://orcid.org/0000-0003-3304-1768

Abstract

This study focuses on the translation product in the form of subtitles and, in particular, investigates the perceived quality of machine-translated post-edited interlingual subtitles. Basing this study on data collected from Brazilian professional translators, we analyse whether the use of machine translation has an impact on the perceived quality and acceptability of interlingual subtitles. We also examine those technical parameters and linguistic aspects that the study participants considered troublesome and whether translators would report any evidence that the subtitles were machine-translated. Sixty-eight Brazilian translators volunteered to participate in this study. They completed a questionnaire, watched a movie trailer with post-edited subtitles, and then assessed the quality of the subtitles by rating them using a Likert-type scale. Finally, the participants responded to a written verbal protocol. Our analysis relies on the qualitative and quantitative data provided by the participants. The qualitative and quantitative data from the protocol answers were triangulated with the quantitative results from the Likert-type scale. IBM SPSS Statistics was used for statistical analysis. Our findings show that 28.1% of the translators assessed the post-edited subtitles as very satisfactory whereas 48.4% judged them as satisfactory. These results seem to be an indication of the acceptability of post-editing machine-translated subtitles as they find support in the written verbal protocol answers. Regarding the technical parameters, 43% of the participants reported no issues whereas 22.8% commented on the font colour and the font size. When asked about any linguistic aspects that could have disturbed the viewing experience, 64.1% of them reported that no linguistic issues were evident in the subtitles. A small percentage of the participants reported some linguistic problems, such as a lack of accuracy, the use of literal translation, and unnatural subtitles. 
Despite this apparent linguistic evidence of machine translation, no participant explicitly affirmed that the subtitles were machine-translated and post-edited.

Keywords: subtitling, machine translation, post-editing, audiovisual translation, acceptability, translation product

1. Introduction

Machine translation post-editing (MTPE) as applied to audiovisual translation (AVT) has gradually been introduced into the translation industry (AVTE, 2021; Georgakopoulou, 2019; Georgakopoulou & Bywood, 2014). In the context of subtitling, some of the reasons for the use of machine translation (MT) are the growing demand for this type of AVT combined with the short amount of time provided for it (Burchardt et al., 2016; Díaz Cintas, 2013) and the advances in translation technology tools (Athanasiadi, 2017; Bywood, 2020; Díaz Cintas & Massidda, 2020).

The use of MTPE in subtitling has had a significant impact on subtitling workflows and its effects go beyond technological improvements (AVTE, 2021). It has also affected the AVT market by changing the way subtitlers work, generating resistance from some professionals regarding the use of this technology due to the decrease in their earnings, changes in their workflows, and the low quality of the subtitling output (Castilho, 2011; Szarkowska et al., 2021).

In the academic field, the number of investigations into subtitling and MT has increased (Karakanta, 2022; Volk, 2008), mainly to assess the quality of subtitle translation (Brendel & Vela, 2022; Doherty & Kruger, 2018; Koglin et al., 2022) and to evaluate whether MT is feasible and able to help the translators to work more efficiently and with increased productivity (Castilho, 2011; Etchegoyhen et al., 2014; Karakanta et al., 2022; Koponen et al., 2020; Matusov et al., 2019).

In the AVT context, the notion of quality may vary according to the needs and expectations of the stakeholders involved in the production and consumption of translated audiovisual products (Szarkowska et al., 2021). On the one hand, translation scholars may criticize and question the adequacy of some translated programmes that, on the other hand, translators and media companies consider adequate. Similarly, viewers can be highly critical of the quality of subtitled audiovisual products due to the inherent vulnerability of subtitling (Baños Piñero & Díaz Cintas, 2015; Koglin, 2008) or the variation in their expectations of quality.

In this sense, we think that feedback from translators or post-editors provides useful information for improving MT systems and their acceptability in the subtitling market (AVTE, 2021; Bywood et al., 2017; Koponen et al., 2020). In addition, owing to their training and professional experience, translators are best prepared to examine the human aspects of translation technology (Bywood et al., 2017; Pym, 2012). We believe that the translators’ perceptions are essential to eliminating or reducing errors in the MT output; their perceptions can include linguistic analysis, the editing of MT errors, and pattern recognition.

Bearing that in mind, we have investigated whether using MTPE would influence the quality of interlingual subtitles. Based on this research question, we aimed to analyse the acceptability of MTPE subtitles by taking into consideration translators’ perceptions about their quality. More specifically, we analysed the perceived quality of post-edited interlingual subtitles using data collected from an online study with Brazilian professional translators. We also examined which technical and linguistic parameters[i] were considered troublesome and whether translators would report any evidence that the subtitles used in the study were machine-translated and post-edited. The research was developed within the scope of Research Group Translation and Technologies (GETRADTEC) at the Federal University of Pernambuco, Brazil, a research group that currently investigates aspects of the quality and reception of machine-translated and post-edited interlingual subtitles.

This article presents the results of an experiment about the quality of MTPE subtitles and comprises the following sections: in section 2, we present the Related work, a compilation of articles regarding the quality assessment of machine-translated and post-edited interlingual subtitles. In section 3, we explain the methodological aspects of our data collection and data analysis. In section 4, the data are analysed and discussed. Section 5 contains our final remarks.

2. Related work

The application of MT to subtitling began late in comparison to its integration into the localization sector. According to Bywood et al. (2017), this happened because, first, subtitling is an open domain that can deal with different areas of interest and diverse vocabularies. Secondly, the source text (ST) in subtitling has its own grammar because it is a written representation of spoken language (Burchardt et al., 2016; Volk, 2008). Despite such challenges, MT has gradually been integrated into the subtitling workflow. Yet it should be stressed that post-editing (PE) is necessary where MT is used to produce a translation output that is later revised by a translator (Koponen et al., 2020). This is necessary because MT still requires a detailed revision to ensure that the output is accurate and of a high quality. In addition, studies focusing on the subtitlers’ or post-editors’ perceptions of the MTPE quality can contribute to the academic field, to the professionals’ training, and consequently to the AVT industry. As indicated by Bywood et al. (2017):

There may be value in looking at the profiles of each of the subtitlers working on the evaluation in order to identify trends that are linked to factors such as experience, speed, and ways of working. (p. 501)

Etchegoyhen et al. (2014) have reported on a large-scale evaluation of the use of statistical MT (SMT) systems for professional subtitling. Within the scope of the SUMAT project, two series of assessments were carried out: a quality evaluation and a measure of the productivity gain and/or loss. The authors submitted the subtitles to the evaluation of subtitlers, correlating the professionals’ scores and feedback with the SMT systems developed in the SUMAT project and automated metrics computed on the post-edited files. Furthermore, recurrent MT errors were collected to upgrade the systems gradually. The results of the study by Etchegoyhen et al. (2014) showed that most of the machine-translated subtitles received good quality ratings and “promising results in terms of quality and usefulness for a professional use applied to the variety of domains and genres found in subtitling” (p. 47).

In the same project, Georgakopoulou and Bywood (2014) presented a linear study of machine-translated subtitles that values the interaction between industry, MT systems, and human beings. This relationship arises from the relevance of observing what would be good enough to enable the audience to understand the translated product. In this process professional subtitlers will need to be trained as post-editors; alternatively, professional post-editors from the text translation industry will need to be trained as subtitlers (Georgakopoulou & Bywood, 2014).

The pivotal role of professional subtitlers in the AVT industry goes beyond their daily work as audiovisual translators. Several studies (e.g., Robert & Remael, 2016; Szarkowska et al., 2021) have observed that professionals are best suited to assessing subtitling quality because of their extensive technical and practical knowledge. In this regard, Robert and Remael (2016) investigated the quality control and quality assurance of interlingual subtitles by exploring the contributions of subtitlers to subtitling quality. Regarding the technical parameters, the subtitlers agreed that they adhered strictly to the style manuals and in their perception the main factors that affected quality were content, grammar, readability, and contextual appropriateness.

Following this line of thought, Szarkowska et al. (2021) proposed an experiment to evaluate the indicators of subtitling quality as viewed by the audience and subtitlers, based on two quality parameters: subtitle display rates and line breaks. The two groups of participants agreed that quality is a key factor in subtitling. Their findings show some similarities and differences, particularly as regards the two groups’ views about the condensation strategy. Many viewers criticized subtitles that do not have all the content from the original dialogue because it makes them feel perplexed and confused when they can hear one thing but find themselves reading something else. For their part, professionals consistently associate good-quality subtitles with a high degree of text condensation. These results can contribute to defining the quality parameters of subtitles in order to improve the quality of both their production and their reception.

To assess the quality of interlingual subtitling, Pedersen (2017) developed a model that focused on evaluating the final product (the subtitles). Pedersen’s model is based on error analysis in which for each error detected a penalty point was assigned. FAR is the acronym for functional equivalence, acceptability, and readability – the three main categories assessed by the model. The score varies according to the severity of an error, the errors being subcategorized as minor, standard, and serious. The model has some weaknesses, including the fact that it is prone to subjectivity when assessing idiomaticity and equivalence errors, and it is based on error analysis, that is to say, it does not reward excellent solutions.
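The penalty-point logic described above can be sketched in a few lines of Python. This is only an illustration of error-based scoring under stated assumptions: the numeric weights in `PENALTY`, the function names, and the normalization by number of subtitles are hypothetical choices made for this sketch and are not taken from the description above.

```python
# Illustrative penalty weights per error severity. These numbers are
# assumptions made for this sketch, not values given in the article.
PENALTY = {"minor": 0.25, "standard": 0.5, "serious": 1.0}

# The three areas named by the FAR acronym.
AREAS = ("functional_equivalence", "acceptability", "readability")


def far_penalties(errors):
    """Sum penalty points per area.

    `errors` is an iterable of (area, severity) pairs, one per detected error.
    """
    totals = {area: 0.0 for area in AREAS}
    for area, severity in errors:
        if area not in totals:
            raise ValueError(f"unknown area: {area}")
        totals[area] += PENALTY[severity]
    return totals


def far_rates(errors, n_subtitles):
    """Return penalty points per subtitle for each area (lower is better).

    Dividing by the number of subtitles is an assumed normalization that
    makes files of different lengths comparable.
    """
    if n_subtitles <= 0:
        raise ValueError("n_subtitles must be positive")
    totals = far_penalties(errors)
    return {area: points / n_subtitles for area, points in totals.items()}
```

Because the sketch only ever adds penalties, it also makes the weakness noted above visible: an excellent solution contributes nothing to the score, so two files with no detected errors are indistinguishable.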

Regarding quality in human and machine-generated subtitles, Doherty and Kruger (2018) examined the ways in which new technologies and the diversity of new media are testing and influencing the notion of quality. The authors identified the fields in which quality is in jeopardy in AVT due to “the complexity of AVT compared to traditional, static text, and the need to quantify dimensions that are inherently qualitative” (Doherty & Kruger, 2018, p. 182). The authors concluded that the visibility of quality assessment in AVT appears to be increasing in both academia and industry.

Concerning the PE of automatic subtitles, Karakanta et al. (2022) conducted a study that investigated the impressions and feedback of professional subtitlers who experienced PE with automatically generated subtitles. Their analysis of

the open questions showed that the main issues of automatic subtitling stem from failures in speech recognition and pre-processing, which result in propagation of errors, translations out of context, inaccuracies in auto-spotting and suboptimal segmentation (Karakanta et al., 2022, p. 9).

The subtitlers faced some pre-processing problems that led to errors. However, they evaluated positively the provision of useful suggestions, speed, and effort-reduction related to the technical parameters, which can lead to a better quality of subtitles.

Employing the English-German machine-translated subtitles from the SubCo corpus, a corpus that includes both human and machine-translated subtitles, Brendel and Vela (2022) presented “a novel approach for assessing the quality of machine-translated subtitles” (p. 52). This new method of assessment is based on annotation and error-evaluation schemes performed manually and applied to four categories: content, language, format, and semiotics. Karakanta (2022) showed the difficulties involved in carrying out a quality investigation based on human assessment due to its subjectivity and cost and also because it is time-consuming. Although Karakanta (2022) considers that the human evaluation of MT subtitles across all development stages and for every system is not feasible, she also recognizes that assessing quality using string-based metrics – that is, metrics based on similarities and the distance between two texts (e.g., BLEU, NIST, TER, and chrF2) – is insufficient to represent “the functional equivalence required in creative and multimodal texts such as subtitles” (Karakanta, 2022, p. 7). That is why human evaluation emerges as a predominant factor, since it “is considered the primary type of evaluation for any technology, given that automatic metrics often do not fully correlate human judg[e]ments/effort” (Karakanta, 2022, p. 8). To fill this gap, in addition to the human evaluation of subtitles, the approach of Brendel and Vela (2022) also suggests measuring the inter-annotator agreement of the human error annotation and assessment, plus estimating PE effort. The aim of Brendel and Vela’s method is to provide detailed information about the quality of machine-translated subtitles by finely detecting errors and weaknesses in the MT engine.
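To make the notion of a string-based metric concrete, the following minimal sketch computes a word-level edit distance between a candidate subtitle and a reference, and divides it by the reference length. It is a simplified, TER-like rate written for illustration only: it omits the block shifts that TER allows, the function names are hypothetical, and it is not the implementation used in any of the studies cited.

```python
def word_edit_distance(hypothesis, reference):
    """Minimum number of word insertions, deletions and substitutions
    needed to turn `hypothesis` into `reference` (Levenshtein distance)."""
    hyp = hypothesis.split()
    ref = reference.split()
    # prev[j] holds the distance between the hypothesis words processed
    # so far and the first j reference words.
    prev = list(range(len(ref) + 1))
    for i, hyp_word in enumerate(hyp, start=1):
        curr = [i]
        for j, ref_word in enumerate(ref, start=1):
            cost = 0 if hyp_word == ref_word else 1
            curr.append(min(prev[j] + 1,          # delete a hypothesis word
                            curr[j - 1] + 1,      # insert a reference word
                            prev[j - 1] + cost))  # match or substitute
        prev = curr
    return prev[-1]


def edit_rate(hypothesis, reference):
    """Edit distance normalized by reference length (lower means closer)."""
    ref_len = len(reference.split())
    if ref_len == 0:
        raise ValueError("reference must contain at least one word")
    return word_edit_distance(hypothesis, reference) / ref_len
```

A measure of this kind only counts surface differences. A well-condensed subtitle that deliberately departs from the reference wording would receive a high edit rate even if it conveys the same meaning, which is one way to read the limitation noted by Karakanta (2022).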

Considering the relationship between MTPE subtitling, quality assessment, and audience reception, the study carried out by Koglin et al. (2022) combined the human evaluation of MTPE subtitles and the error scores of the FAR model. The study reports on two pilot experiments: one about the quality and the other about the reception of MTPE interlingual subtitles. They were conducted with translators and undergraduate students from the Linguistics and/or Languages Department, respectively. Data analysis suggested that regarding meaning and target language norms, the MTPE subtitles were of a good quality. However, the technical parameters of the subtitling were of a lower quality, affecting the trailer viewing, as indicated by most of the participants in that study. Despite that, the general subtitling quality was unaffected, as shown by the correlation of human evaluation (i.e., translators and undergraduate students) and the error categorizations of the FAR model. Following this, the pilot experiment on quality reported in Koglin et al. (2022) was then conducted with a larger number of participants, as detailed in the following section.

3. Methodology

This study focuses on the translation product and analyses the perceived quality of MTPE interlingual subtitles using data collected from Brazilian professional translators in order to investigate whether the use of MT has an impact on the perceived quality of interlingual subtitles. The assessment was performed using a 5-point Likert-type scale and the participants evaluated the subtitles according to their own perceptions. The methodological aspects are detailed in the following subsections.

3.1 Data collection

3.1.1 Material

The material we used is the film trailer The Red Sea Diving Resort – Missão no Mar Vermelho in Brazilian Portuguese – (Raff, 2019), directed by Gideon Raff and released by the streaming platform Netflix in 2019. The trailer is of 2 min 21 s duration and was selected from the Netflix Brazil catalogue in February 2020. The film was inspired by the events of Israel’s Operation Moses and Operation Joshua (1984–1985).

The film trailer was selected based on three criteria: first, it had to be subtitled in the English–Brazilian Portuguese (EN/PT–BR) language pair; second, it had to be available as subtitled in the Netflix Brazil catalogue; and, finally, the same trailer published in the Netflix catalogue had to be available for downloading on the internet without subtitles, so that the dialogues could be machine-translated and post-edited into PT–BR.

3.1.2 Subtitling procedures

At first, all the trailer dialogue was transcribed. Next, the original dialogue was machine-translated and post-edited using the software Subtitle Edit (version 3.5.1), which contains professional resources to create, adjust, and synchronize subtitles. In addition, this software allows MT to be incorporated and this was carried out in our study using a Google API Key application integrated into Subtitle Edit. After being machine-translated, the subtitles were post-edited by a post-editor and revised by a proofreader. At the time of the experimental design, the post-editor had had formal training and three years of experience in post-editing, one year of experience in subtitling and more than ten years’ experience in translating texts from other domains. The proofreader had formal training in subtitling and less than two years of experience in translation.

Regarding the PE brief, both the post-editor and the proofreader were instructed to revise the MT output and follow the Brazilian Portuguese Timed Text Style Guide in doing so (Netflix, 2023). Concerning the PE guidelines, the post-editor was asked to carry out a full PE according to the ISO 18587:2017 standard, “Translation services – Post-editing of machine translation output – Requirements” (ISO, 2017). We applied this standard, which presents general requirements for MTPE, since specific guidelines or ISO standards for dealing with the MTPE of interlingual subtitling are not available at this time. Both professionals were also told to perform adjustments to the automatically estimated timestamps and the line breaks. However, they were not explicitly instructed to change the font size and the font colour.

3.1.3 Participants

The participants in this study were 68 professional Brazilian translators who volunteered to take part in an online experiment. They were all native speakers of Brazilian Portuguese (first criterion) and considered English as their second language (second criterion). The first participant responded to the survey on 16 April 2021 and the last participant on 23 May 2022.

It should be noted that one of the main challenges of conducting empirical experimental research with translators in Brazil is the fact that Brazil’s legislation does not allow researchers to pay for participants to take part in an experiment. Consequently, the translators have to stop their paid work and participate voluntarily. A major consequence of this scenario is the difficulty of recruiting participants who, sometimes, do not fully fit the expected participant profile or do not have sufficient professional experience to evaluate quality, for instance. For this reason, the results of this research may not be generalizable, because our sample was not homogeneous according to professional experience.

3.1.4 Experimental design

The experiment was conducted entirely online due to the covid-19 pandemic. First, all the participants filled out a prospective questionnaire prepared in Google Forms. This questionnaire was used to collect the participants’ personal and professional information related to their working languages, their level of proficiency, their preference concerning AVT modes (subtitling or dubbing), and their preferred place for watching subtitled movies and series.

After that, they accessed a link to watch the trailer – The Red Sea Diving Resort (Raff, 2019) – with post-edited subtitles and then assessed their quality globally by means of a 5-point Likert-type scale (from 5 Very satisfied to 1 Unsatisfied – see Appendix A). It is worth mentioning that the participants did not receive any further instructions about how they should interpret quality. Finally, the participants responded to a written verbal protocol, which consisted of four open questions (see Appendix B). Next, all the data were tabulated and analysed, as detailed in the following subsection.

3.2 Data Analysis

The analysis relies on qualitative and quantitative data, which were triangulated. Quantitative analysis focused on data from the 5-point Likert-type scale, the prospective questionnaire and the written verbal protocols. The data collected through these instruments were all tabulated in an Excel spreadsheet. Regarding the written verbal protocols, all the answers were categorized in an Excel spreadsheet according to the technical and linguistic parameters of the subtitles that the participants chose to comment on. Although the participants were given some suggestions of linguistic and technical parameters (see questions 2 and 3 in Appendix B), they could discuss any parameter they wanted to. All the quantitative data were analysed statistically using IBM SPSS Statistics.

The written verbal protocols were also used for qualitative analysis, that is, the participants’ answers were used to explain and describe how Brazilian professional translators perceive the quality of MTPE subtitles and how they assess them.

4. Results and discussion

For the purposes of this article, the analysis of the quality assessments of the interlingual post-edited subtitles focuses on both quantitative and qualitative data originating from the Likert-type scale, the written verbal protocol and the prospective questionnaire. Of the 68 participants, 64 were considered eligible for data analysis. One participant was excluded from data analysis for not being a native speaker of PT–BR; another was removed due to an inconsistency between their Likert-scale rating and their verbal report; and two others for revealing that they did not have access to the audio of the trailer.

The age range of most of the participants was 18–30 (42.2%), with 70.3% identified as women and 29.7% as men. Concerning the participants’ professional profile, fewer than half of the sample (43.7%) comprised subtitlers. The other participants worked with literary translation, technical translation, and localization. A Chi-Square test was performed to test whether there was an association between the type of translation performed by the participants and their quality assessment. However, there was not a significant relationship between the two variables, χ²(51, n = 64) = 50.14, p = .508.
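For readers unfamiliar with the test, the sketch below shows how a Chi-Square statistic of independence and its degrees of freedom are obtained from a contingency table. It is an illustration only: the study itself used IBM SPSS Statistics, the function name is hypothetical, and any table passed to it here would be made-up counts rather than the study’s data. The p value, which is read from the Chi-Square distribution with the returned degrees of freedom, is not computed in this sketch.

```python
def chi_square_independence(table):
    """Return (statistic, degrees_of_freedom) for a contingency table.

    `table` is a list of rows of observed counts, for example one row per
    type of translation work and one column per rating category.
    Assumes every row total and column total is greater than zero.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)

    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Count expected in this cell if rows and columns were independent.
            expected = row_totals[i] * col_totals[j] / n
            statistic += (observed - expected) ** 2 / expected

    degrees_of_freedom = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, degrees_of_freedom
```

A statistic close to its degrees of freedom, as in the result reported above (50.14 with 51 degrees of freedom), is what one would expect when the two variables are unrelated, which is consistent with the non-significant p value.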

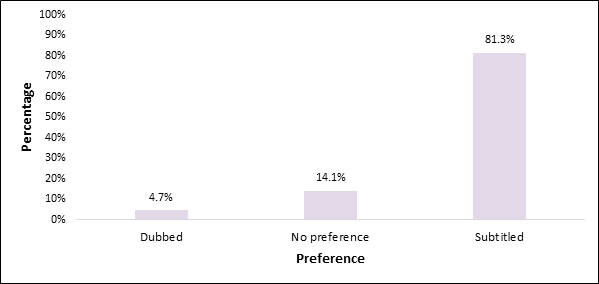

Figure 1 provides an overview of the participants’ preference regarding the AVT modes (subtitling or dubbing) used when watching content that is not in their native language, that is, Brazilian Portuguese.

Figure 1

Participants’ preference regarding AVT transfer modes

As can be seen in Figure 1, the vast majority of the participants (81.3%) preferred to watch subtitled movies, whereas 14.1% had no preference for either dubbed or subtitled movies. This result is consistent with an increasing worldwide trend of watching English-language films with subtitles (Birulés-Muntané & Soto-Faraco, 2016) and with the findings of Szarkowska et al. (2021), in which most participants (77%) selected subtitling when asked about their preferred type of AVT. These results are also similar to those reported by Kuşçu-Özbudak (2022), where six out of nine participants preferred to watch films with interlingual subtitles.

Furthermore, those results could partly be explained by the subtitling tradition in Brazil. As reported by Freire (2015), subtitling was growing in popularity in the country during the 1930s; and at the end of that decade subtitling had been “established as the standard for adjusting foreign prints for the Brazilian market” (p. 208).

In addition, considering that only 43.7% of our sample consisted of subtitlers, it could be helpful to have this kind of information about the participants because it might reveal their positive attitude to subtitling, our object of analysis. Besides that, even if they were not subtitlers, these kinds of data show they are at least familiar with subtitles as viewers.

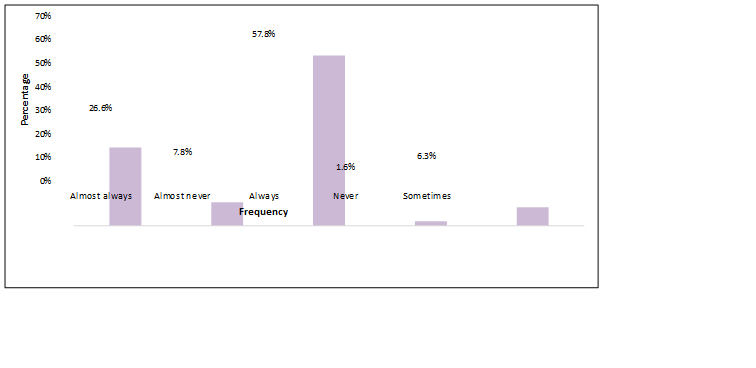

The participants were also asked how often they watched subtitled films and/or series; their answers are shown in Figure 2.

Figure 2

Participants’ frequency of watching subtitled films and/or series


Figure 2 shows that more than half the sample (57.8%) reported that they always watch subtitled films, followed by 26.6% who reported that they almost always choose this mode of AVT. This result is similar to the findings of Kuşçu-Özbudak (2022), where six out of nine participants always preferred Turkish subtitles.

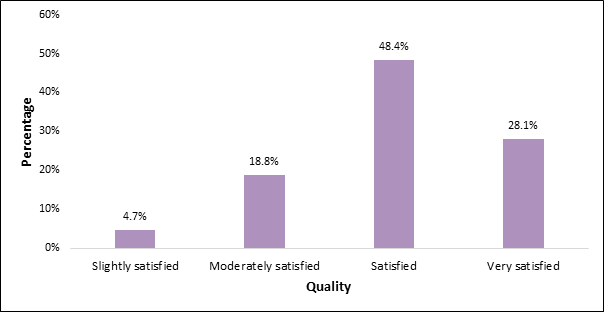

As part of our research, the participants were asked to evaluate the quality of post-edited interlingual subtitles. The results generated by applying a 5-point Likert-type scale are shown in Figure 3.

Figure 3

Translators’ assessment of MTPE subtitles

As can be seen from Figure 3, the results regarding the use of MTPE subtitles seem encouraging: 18 out of 64 translators (28.12%) were very satisfied with the MTPE subtitles, followed by 31 (48.44%) who were satisfied. Only three participants out of 64 were slightly satisfied. This overall positive evaluation of the MTPE subtitles is consistent with the results of previous research that investigated the relationship between MTPE, human translation, and quality in different domains (Carl et al., 2011; Daems et al., 2013; García, 2011; Guerberof Arenas, 2008; Läubli et al., 2013; Plitt & Masselot, 2010; Screen, 2017; Skadiņš et al., 2011). These studies showed that the use of full PE does not necessarily lead to translation of poorer quality.

In addition, our result is in agreement with the study conducted by Karakanta et al. (2022) that collected subtitlers’ impressions and feedback on the use of automatic subtitling in their workflows. Their findings have shown that “automatic subtitling is seen rather positively and has potential for the future” (Karakanta et al., 2022, p. 1).
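The proportions reported for Figure 3 follow directly from the counts given in the text and can be re-derived with a short calculation. The sketch below uses only the three counts stated above (18, 31 and 3 out of 64 eligible participants); the text does not break down the remaining ratings, so they are left out. The variable and function names are hypothetical.

```python
N_ELIGIBLE = 64  # participants retained for data analysis

# Counts stated in the text for three of the five scale points.
REPORTED_COUNTS = {
    "very satisfied": 18,
    "satisfied": 31,
    "slightly satisfied": 3,
}


def share(count, total):
    """Return `count` as a percentage of `total`."""
    return 100.0 * count / total


shares = {label: share(count, N_ELIGIBLE)
          for label, count in REPORTED_COUNTS.items()}

# Combined share of the two most favourable ratings.
top_two = share(REPORTED_COUNTS["very satisfied"] + REPORTED_COUNTS["satisfied"],
                N_ELIGIBLE)

for label, value in shares.items():
    print(f"{label}: {value:.2f}%")
print(f"very satisfied or satisfied: {top_two:.2f}%")
```

Taken together, the two most favourable ratings account for 49 of the 64 eligible participants, or roughly three quarters of the sample.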

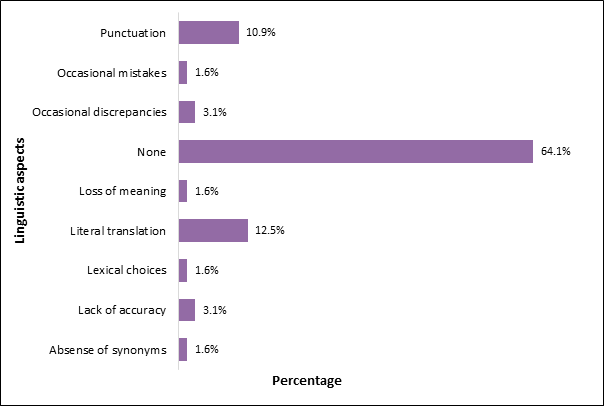

According to Doherty and Kruger (2018), even though each AVT mode has its own quality considerations and assessment, there is consensus that a central part of quality assessment is the linguistic information of the audiovisual text and the way it was translated. Therefore, in order to gain a deeper understanding of the quality of the post-edited subtitles, our participants were asked to explain whether there was any linguistic aspect that affected their comprehension and viewing of the movie trailer. Their responses are shown in Figure 4.

Figure 4

Linguistic problems with the MTPE subtitles

Consistent with the overall positive quality assessment, the majority of the translators (64.1%) reported that no linguistic aspect had affected their viewing experience. Participant 56, for instance, who is an experienced subtitler, stated that the “translation choices were good considering the necessity of summarizing information to meet the demands of space constraint, especially in a movie like this one with fast-paced dialogues”.[ii]

A small percentage of the participants (12.5%) thought that sometimes the translation was too literal. Participant 25, who has more than 10 years’ experience working as a subtitler, stated: “Many subtitles could be more concise. The translation was too literal.”[iii] Although this participant has not explicitly linked the literal translation to the fact that subtitles were machine-translated and post-edited, this could be a possible explanation for literal translation in a medium that is characterized by text condensation (Díaz Cintas & Remael, 2020). This explanation finds support in the study of Karakanta et al. (2022), in which the surveyed subtitlers reported that automatic translation tends to be a bit too literal.

Furthermore, the perception of literal translation could also be explained by the priming effect of the raw MT output on the post-editor’s lexical and syntactic choices. In a study conducted on the PE of literary texts, Castilho and Resende (2022) found out that the PE proximity to the MT output may result in the writers’ style being distorted and the final product being influenced. In addition to that, an investigation conducted by Toral (2019) about the manifestation of translationese and post-editese found that post-edited texts tend to be simpler and have a higher degree of interference from the source language when compared to human translation.

In addition, because the application of MTPE to subtitling is a “critical and somewhat controversial issue amongst professionals” (Mangiron, 2022, p. 419), we have also examined whether translators would report any verbal evidence that the subtitles were machine-translated. Although the participants were not explicitly informed about the use of MT during the experiment, the consent form mentioned that this study was part of a larger project entitled Audiovisual Translation Assisted by Machine Translation: An Empirical-Experimental Study about Quality and Reception. Besides that, question number 4 of the written verbal protocol was open and allowed them to comment freely about any other aspect not covered by questions 1, 2 and 3 (see Appendix B).

In their answers to the written verbal protocol, the participants mentioned some problems – a lack of accuracy, the use of literal translation, unnatural subtitles – which could be the result of using MTPE. Participant 08, for example, reported that

most part of the text is the result of a word-for-word translation. That is why sometimes the dialogues seem unnatural. If there were a little bit more of the translator’s voice, the subtitles might sound more natural.[iv]

Despite this supposed linguistic evidence of MT, no participant explicitly affirmed that the subtitles were machine-translated and post-edited.

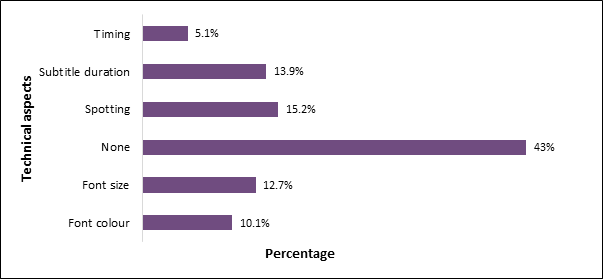

Our experimental design also included a question in the written verbal protocol about presentation and time parameters (i.e., number of lines per subtitle, font colour, font size, subtitle duration, timing), which are restrictions inherent in subtitling, as Doherty and Kruger (2018) explain. All the participants were asked the following question: “Is there any technical aspect that affected your comprehension and viewing of the movie trailer?” Figure 5 shows the percentage of each problem related to the technical subtitling parameters.

Figure 5

Technical problems in the MTPE subtitles

As can be seen from Figure 5, less than half of the sample (43%) reported no technical problems whereas 22.8% commented on problems with the font colour and the font size. Spotting (15.2%) and subtitle duration (13.9%) were also found to affect their viewing of the movie trailer. This result illustrates the importance of taking into account both linguistic and technical variables when analysing the assessment of quality in MTPE subtitling.

Similarly to the pilot study results reported by Koglin et al. (2022), the MTPE subtitles fared better on meaning and target-language norms than on technical parameters: 64.1% of the participants reported no linguistic issues, whereas only 43% reported no technical problems. This result provides an indication of the complexity of assessing the quality of interlingual subtitles: the variables involved are interdependent and the technical requirements affect the degree to which a subtitle is condensed or not. In other words, changes in an MTPE subtitle will possibly require adjustments to the automatically estimated timestamps or line breaks. Karakanta (2022) states this as follows:

As a result, assessing quality becomes a problem composed of multiple variables, some of which may be weighted more heavily than others. For example, is a perfect translation with implausible line breaks which is presented at a fast reading speed better than an erroneous translation with proper breaks and comfortable reading speed? (p. 17)

The answer to this question is not as straightforward as it may seem. On the one hand, when we consider the comparison of linguistic and technical problems in our study, there seems to be a greater impact of the technical problems on quality perception. This could in part be explained by the fact that in our study the post-editors were told to perform adjustments to the automatically estimated timestamps or line breaks but were not explicitly instructed to change the font size and the font colour; as a result, they might have assumed that these had already been approved by the client. It is nevertheless worth noting that in some cases technical problems can affect linguistic processing and comprehension, as highlighted by a professional subtitler surveyed in a study conducted by Szarkowska et al. (2021). For this professional, reading speed is a determinant of subtitle quality. Although, from an audience perspective, poor subtitles are often associated with bad translations, this professional subtitler associates poor subtitles with literal translations – in other words, subtitles that are not condensed enough and “lead to absurd reading speeds, which means you can no longer watch the programme since you’re just reading the subtitles” (Szarkowska et al., 2021, pp. 8–9).

On the other hand, in a study conducted by Robert and Remael (2016) with 99 professional subtitlers, the participants admitted that they had followed the technical guidelines, but in their opinion the most important parameters affecting quality were content, grammar, readability, and contextual appropriateness. Although the results of their study indicate that these linguistic parameters play an important role in the quality assessment of subtitling, the verbal feedback provided by the participants in our study suggests that technical parameters may be equally important, given the interdependence of both in creating a pleasant viewing experience. This result is also in line with the answers provided by the translators who participated in an experiment carried out by Koponen et al. (2020). The data collected through a questionnaire and an interview indicated that the segmentation and timing of MT subtitles were identified as the main problems, in addition to the general quality of the MT output (Koponen et al., 2020).

In our study, Participant 27 expressed their annoyance at the subtitle speed, which interfered with both movie comprehension and viewing. This participant mentioned that “... sometimes [they] had to choose between the subtitle and the image”.[v] Font colour (and size) and timing were also pointed out as being matters that affected the viewing experience.

When asked about the technical parameters that could have affected movie comprehension and appreciation, Participant 45 stated:

Font colour[vi] and duration. I believe that sometimes subtitles were too fast and sometimes too slow considering the ideal duration that is necessary to provide good readability for myself as both the trailer viewer and consumer.[vii]

Both statements are consistent with the perceptions found by Szarkowska et al. (2021) in their study about subtitling quality with a cohort of professional subtitlers. A crucial element in the quality assessment of interlingual subtitles is the subjective nature of the concept. As stated by Mangiron (2022), “what some users find acceptable, others do not. … In addition, the concept of quality changes through time, as technology evolves, and users’ preferences change” (p. 418). Bearing that in mind and the varied technical parameters of subtitling adopted in different media, we examined whether the quality assessment of our participants could be affected by the different media they consume when they watch subtitled movies and series.

A chi-square test was performed to verify whether there was an association between the different media (i.e., Netflix, cable TV, cinema) and the participants’ quality assessments. There was in fact a significant relationship between the two variables, χ²(33, n = 64) = 56.81, p = .006: the highest rating on the Likert-type scale was given by translators who regularly watch movies on Netflix. This result might have been influenced by their familiarity with the Netflix parameters as viewers. As highlighted by Szarkowska et al. (2021):

New technical developments in the industry and the greater exposure of audiences to subtitles are having a direct impact in the increase in subtitles presentation speeds, that are going up to 17 cps, and the maximum number of characters allowed per line, extending on occasions to 42, which in turn reflect less condensation of the target text. (p. 668)
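As a rough cross-check of the chi-square result reported above for the association between media and quality ratings, the p-value can be approximated from the test statistic (56.81) and the degrees of freedom (33) alone. The sketch below is not the SPSS procedure used in the study: it assumes the Wilson-Hilferty cube-root normal approximation, and the function name is hypothetical. Under that assumption the result should land close to the reported p = .006.

```python
import math


def chi2_sf_wilson_hilferty(stat: float, df: int) -> float:
    """Approximate upper-tail p-value of a chi-square statistic.

    Assumption: uses the Wilson-Hilferty cube-root normal approximation,
    not the exact chi-square distribution that a statistics package
    would evaluate.
    """
    if stat < 0 or df <= 0:
        raise ValueError("stat must be non-negative and df must be positive")
    spread = 2.0 / (9.0 * df)
    # Cube-root transform of stat/df is approximately normal
    # with mean (1 - spread) and variance spread.
    z = ((stat / df) ** (1.0 / 3.0) - (1.0 - spread)) / math.sqrt(spread)
    # Upper tail of the standard normal distribution.
    return 0.5 * math.erfc(z / math.sqrt(2.0))


# Values taken from the reported test: statistic 56.81 with 33 degrees of freedom.
p_value = chi2_sf_wilson_hilferty(56.81, 33)
print(f"approximate p = {p_value:.4f}")
```

An exact value would require the chi-square distribution function from a statistics package; the approximation is used here only to keep the sketch free of external dependencies.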

Regarding the reading speed, the Netflix style guide for Brazilian Portuguese recommends 17 characters per second and a maximum of 42 characters per line (Netflix, 2023). This guideline exceeds the average rate[viii] used in Brazilian television, cinema, VHS, and DVDs (Campos & Azevedo, 2020); therefore it may have an impact on the translators’ perception of quality if they are not familiar with Netflix guidelines.
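The two limits mentioned above (17 characters per second and 42 characters per line) can be checked mechanically for a given subtitle. The following is a minimal sketch rather than a description of any tool used in the study: the `Subtitle` class, the function name, and the example subtitles are hypothetical, and the sketch assumes that every character, including spaces and punctuation, counts towards the reading speed, which is a convention that varies between style guides.

```python
from dataclasses import dataclass
from typing import List

MAX_CPS = 17             # characters per second, as cited from the style guide
MAX_CHARS_PER_LINE = 42  # characters per line, as cited from the style guide


@dataclass
class Subtitle:
    """Hypothetical container for one subtitle event."""
    lines: List[str]
    start_s: float  # in-time, in seconds
    end_s: float    # out-time, in seconds


def check_subtitle(sub: Subtitle,
                   max_cps: float = MAX_CPS,
                   max_cpl: int = MAX_CHARS_PER_LINE) -> List[str]:
    """Return a list of human-readable problems (empty if none are found)."""
    duration = sub.end_s - sub.start_s
    if duration <= 0:
        return ["non-positive duration"]
    problems = []
    # Assumption: spaces and punctuation are counted as characters.
    total_chars = sum(len(line) for line in sub.lines)
    cps = total_chars / duration
    if cps > max_cps:
        problems.append(f"reading speed {cps:.1f} cps exceeds {max_cps}")
    for number, line in enumerate(sub.lines, start=1):
        if len(line) > max_cpl:
            problems.append(f"line {number} has {len(line)} characters (max {max_cpl})")
    return problems


# Invented example: the same two-line subtitle shown for 2 and for 3 seconds.
text = ["Temos que sair daqui agora,", "antes que eles voltem."]
fast = Subtitle(lines=text, start_s=10.0, end_s=12.0)
slow = Subtitle(lines=text, start_s=10.0, end_s=13.0)
print(check_subtitle(fast))
print(check_subtitle(slow))
```

The example illustrates the interdependence discussed in this section: the wording is identical in both cases, and only the display time determines whether the subtitle stays within the recommended reading speed.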

5. Final Remarks

This research aimed to investigate the quality of MTPE subtitles through a study of the perceptions of Brazilian professional translators. Their overall perception was positive: most of the participants assessed the quality of the subtitles as either very satisfactory (n = 18) or satisfactory (n = 31), and none of them assessed the subtitles as unsatisfactory. These results may indicate acceptance of using MTPE in interlingual subtitling.

In order to answer our research question regarding whether MTPE influences the quality of interlingual subtitles, we analysed the professional translators’ feedback according to both linguistic and technical aspects. As far as the linguistic aspects are concerned, the translators were asked about any problem that could have had an impact on how well they understood and enjoyed the film trailer. A small number of the participants commented negatively on some aspects, such as the lack of accuracy, the use of literal translation, and unnatural subtitles. We believe that this feedback might be explained in part by the proximity of the PE to the MT output, which may have distorted the writers’ style and influenced the final product, as reported by Castilho and Resende (2022). In addition, the MTPE subtitles might have contained those problems as a result of the post-editor’s and proofreader’s lack of experience and formal training in this field. In this regard, it is worth highlighting the conclusion reached by both industry and academia about the importance of training subtitlers as post-editors or taking “the trained post-editors from the text translation industry where they have been working for many years and train them as subtitlers” (Georgakopoulou & Bywood, 2014, “The subtitling post-editor” section). As mentioned by Georgakopoulou and Bywood (2014, “The subtitling post-editor” section), it is clear “that professionals in subtitle post-editing roles need subtitling-specific skills also, with all their domain-specific features”.

Similar results were found when the participants were asked about the technical aspects that could have affected their viewing experience. Fewer than half of the Brazilian translators indicated that they had experienced no technical problems, and some participants reported problems with font colour and font size. They also stated that spotting and subtitle duration had an impact on the extent to which they enjoyed the movie trailer. These results highlight the importance of considering both linguistic and technical parameters when examining the quality of MTPE subtitling.

However, it is important to emphasize that our study was conducted on a film trailer – a short audiovisual product with specific language – which focuses on advertising and publicizing the film. Perhaps experiments carried out with MTPE subtitling of longer audiovisual products, such as films, documentaries, or series, could produce different findings due to their length and the construction of the scenes. Further studies should also take into account the genre of the audiovisual product in order to examine whether the MTPE subtitling quality of fictional films and series differs from the MTPE subtitling quality of reality shows and documentaries, for example.

The data collected and analysed in this article point to ways of carrying out more research of this nature and form a foundation for future studies on MT and its relationship with the quality of MTPE subtitles. In addition to the standards established as requirements for subtitling, the focus on continuous quality assurance is important because of the growing demand for subtitled products (Campos & Azevedo, 2020; Doherty & Kruger, 2018; Kuşçu-Özbudak, 2022). However, the quality standards should take into account the technical parameters and also consider viewer appraisals of reception. Research that focuses on perceptions of quality is able to contribute knowledge that could be useful in bringing about improvements in the sector.

Acknowledgement

We would like to thank the reviewers for their careful reading and thoughtful comments that helped us to refine this article.

References

Athanasiadi, R. (2017). Exploring the potential of machine translation and other language assistive tools in subtitling: A new era? In M. Deckert (Ed.), Audiovisual translation: Research and use (pp. 29–49). Peter Lang.

Audiovisual Translators Europe (AVTE). (2021). AVTE Machine translation manifesto. Retrieved from http://avteurope.eu/wp-content/uploads/2021/09/Machine-Translation-Manifesto_ENG.pdf

Baños Piñero, R., & Díaz Cintas, J. (2015). Audiovisual translation in a global context. In R. Baños Piñero & J. Díaz Cintas (Eds.), Audiovisual translation in a global context: Mapping an ever-changing landscape (pp. 1–10). Palgrave Macmillan. https://doi.org/10.1057/9781137552891_1

Birulés-Muntané, J., & Soto-Faraco, S. (2016). Watching subtitled films can help learning foreign languages. PLoS One, 11(6), 1–11. https://doi.org/10.1371/journal.pone.0158409

Brendel, J., & Vela, M. (2022). Quality assessment of subtitles: Challenges and strategies. In P. Sojka, A. Horák, I. Kopeček, & K. Pala (Eds.), Text, speech, and dialogue (pp. 52–63). Springer. https://doi.org/10.1007/978-3-031-16270-1_5

Burchardt, A., Lommel, A., Bywood, L., Harris, K., & Popović, M. (2016). Machine translation quality in an audiovisual context. Target, 28(2), 206–221. https://doi.org/10.1075/target.28.2.03bur

Bywood, L., Etchegoyhen, T., & Georgakopoulou, P. (2017). Embracing the threat: Machine translation as a solution for subtitling. Perspectives: Studies in Translation Theory and Practice, 25(3), 492–508. https://doi.org/10.1080/0907676X.2017.1291695

Bywood, L. (2020). Technology and audiovisual translation. In Ł. Bogucki & M. Deckert (Eds.), The Palgrave handbook of audiovisual translation and media accessibility (pp. 503–517). Palgrave Macmillan. https://doi.org/10.1007/978-3-030-42105-2_25

Campos, G. C., & Azevedo, T. A. (2020). Subtitling for streaming platforms: New technologies, old issues. Cadernos de Tradução, 40(3), 222–243. https://doi.org/10.5007/2175-7968.2020v40n3p222

Carl, M., Dragsted, B., Elming, J., Hardt, D., & Jakobsen, A. L. (2011). The process of post-editing: A pilot study. In B. Sharp, M. Zock, M. Carl, & A. L. Jakobsen (Eds.), Proceedings of the 8th International NLPCS Workshop (pp. 131–142). Samfundslitteratur.

Carvalho, S. R. S., & Seoane, A. F. (2019). A relação entre unidades de velocidade de leitura na legendagem para surdos e ensurdecidos. Transversal – Revista em Tradução, 5(9), 137–153.

Castilho, S. (2011). Automatic and semi-automatic translation of DVD subtitle: Measuring post-editing effort [Unpublished master’s thesis]. University of Wolverhampton.

Castilho, S., & Resende, N. (2022). Post-editese in literary translations. Information, 13(2), 1–22. https://doi.org/10.3390/info13020066

Daems, J., Macken, L., & Vandepitte, S. (2013). Quality as the sum of its parts: A two-step approach for the identification of translation problems and translation quality assessment for HT and MT+PE. In S. O’Brien, M. Simard, & L. Specia (Eds.), Proceedings of the 2nd Workshop on post-editing technology and practice (WPTP-2) (pp. 63–71). European Association for Machine Translation. https://aclanthology.org/2013.mtsummit-wptp.8/

Díaz Cintas, J. (2013). The technology turn in subtitling. In M. Thelen & B. Lewandowska-Tomaszczyk (Eds.), Translation and meaning (Part 9, pp. 119–132). Maastricht School of Translation and Interpreting.

Díaz Cintas, J., & Massidda, S. (2020). Technological advances in audiovisual translation. In M. O’Hagan (Ed.), The Routledge handbook of translation and technology (pp. 255–270). Routledge. https://doi.org/10.4324/9781315311258-15

Díaz Cintas, J., & Remael, A. (2020). Subtitling: Concepts and practices. Routledge. https://doi.org/10.4324/9781315674278

Doherty, S., & Kruger, J. L. (2018). Assessing quality in human and machine-generated subtitles and captions. In J. Moorkens, S. Castilho, F. Gaspari & S. Doherty (Eds.), Translation quality assessment: From principles to practice (pp. 179–197). Springer. https://doi.org/10.1007/978-3-319-91241-7_9

Etchegoyhen, T., Bywood, L., Fishel, M., Georgakopoulou, P., Jiang, J., & Loenhout, G. (2014). Machine translation for subtitling: A large-scale evaluation. In Proceedings of the Ninth International Conference on Language Resources and Evaluation (LREC'14) (pp. 46–53). https://aclanthology.org/L14-1392/

Freire, R. L. (2015). The introduction of film subtitling in Brazil (Trans. R. I. Pessoa). MATRIZes, 9(1), 187–210. https://doi.org/10.11606/issn.1982-8160.v9i1p187-211

García, I. (2011). Translating by post-editing: Is it the way forward? Machine Translation, 25(3), 217–237. https://doi.org/10.1007/s10590-011-9115-8

Georgakopoulou, P. (2019). Technologization of audiovisual translation. In L. Pérez-González (Ed.), The Routledge handbook of audiovisual translation (pp. 516–539). Routledge. https://doi.org/10.4324/9781315717166-32

Georgakopoulou, P., & Bywood, L. (2014). MT in subtitling and the rising profile of the post-editor. Multilingual, 25(1), 24–28.

Guerberof Arenas, A. (2008). Productivity and quality in the post-editing of outputs from translation memories and machine translation. Localization Focus The International Journal of Localization, 7(1), 11–21. https://core.ac.uk/download/pdf/228161879.pdf

International Organization for Standardization. (2017). Translation services—Post-editing of machine translation output—Requirements (ISO Standard No. 18587:2017). https://www.iso.org/standard/62970.html

Karakanta, A. (2022). Experimental research in automatic subtitling: At the crossroads between machine translation and audiovisual translation. Translation Spaces, 11(1), 89–112. https://doi.org/10.1075/ts.21021.kar

Karakanta, A., Bentivogli, L., Cettolo, M., Negri, M., & Turchi, M. (2022). Post-editing in automatic subtitling: A subtitlers’ perspective. Proceedings of the 23rd Annual Conference of the European Association for Machine Translation, 261–270. https://aclanthology.org/2022.eamt-1.29

Koglin, A. (2008). A tradução de metáforas geradoras de humor na série televisiva Friends: Um estudo de legendas [Master’s dissertation, Federal University of Santa Catarina]. UFSC Institutional Repository. https://repositorio.ufsc.br/xmlui/handle/123456789/90889

Koglin, A., Silveira, J. G. P., Matos, M. A., Silva, V. T. C., & Moura, W. H. C. (2022). Quality of post-edited interlingual subtitling: FAR model, translator's assessment and audience reception. Cadernos de Tradução, 42(1), 1–26. https://doi.org/10.5007/2175-7968.2022.e82143

Koponen, M., Sulubacak, U., Vitikainen, K., & Tiedemann, J. (2020). MT for subtitling: Investigating professional translators’ user experience and feedback. Proceedings of the 14th Conference of the Association for Machine Translation in the Americas, 79–92. https://helda.helsinki.fi/handle/10138/324207

Kuşçu-Özbudak, S. (2022). The role of subtitling on Netflix: An audience study. Perspectives: Studies in Translation Theory and Practice, 30(3), 537–551. https://doi.org/10.1080/0907676X.2020.1854794

Läubli, S., Fishel, M., Massey, G., Ehrensberger-Dow, M., & Volk, M. (2013). Assessing post-editing efficiency in a realistic translation environment. Proceedings of MT Summit XIV Workshop on Post-editing Technology and Practice, 83–91. https://doi.org/10.5167/uzh-80891

Mangiron, C. (2022). Audiovisual translation and multimedia and game localisation. In F. Zanettin & C. Rundle (Eds.), The Routledge handbook of translation and methodology (pp. 410–424). Routledge. https://doi.org/10.4324/9781315158945-29

Matusov, E., Wilken, P., & Georgakopoulou, P. (2019). Customizing neural machine translation for subtitling. Proceedings of the Fourth Conference on Machine Translation (WMT): Research Papers, 82–93. https://doi.org/10.18653/v1/W19-5209

Netflix (2023). Brazilian Portuguese timed text style guide. https://partnerhelp.netflixstudios.com/hc/en-us/articles/215600497-Brazilian-Portuguese-Timed-Text-Style-Guide

Pedersen, J. (2017). The FAR model: Assessing quality in interlingual subtitling. The Journal of Specialised Translation, 28, 210–229. https://jostrans.org/issue28/art_pedersen.pdf

Plitt, M., & Masselot, F. (2010). A productivity test of statistical machine translation post-editing in a typical localisation context. The Prague Bulletin of Mathematical Linguistics, 93, 7–16. https://ufal.ms.mff.cuni.cz/pbml/93/art-plitt-masselot.pdf

Pym, A. (2012). Democratizing translation technologies: The role of humanistic research. In V. Cannavina & A. Fellet (Eds.), Language and Translation Automation Conference (pp. 14–29). The Big Wave. http://hdl.handle.net/11343/194362

Raff, G. (Director). (2019). The Red Sea Diving Resort [Film]. Bron Studios; EMJAG Productions; Shaken Not Stirred; Creative Wealth Media Finance; Searchlight Pictures.

Robert, I., & Remael, A. (2016). Quality control in the subtitling industry: An exploratory survey study. Meta, 61(3), 578–605. https://doi.org/10.7202/1039220ar

Screen, B. (2017). Productivity and quality when editing machine translation and translation memory outputs: An empirical analysis of English to Welsh translation. Studia Celtica Posnaniensia, 2(1), 119–142. https://doi.org/10.1515/scp-2017-0007

Skadiņš, R., Puriņš, M., Skadiņa, I., & Vasiljevs, A. (2011). Evaluation of SMT in localization to under-resourced inflected language. Proceedings of the 15th International Conference of the European Association for Machine Translation, 35–40. https://aclanthology.org/2011.eamt-1.7.pdf

Szarkowska, A., Díaz Cintas, J., & Gerber-Morón, O. (2021). Quality is in the eye of the stakeholders: What do professional subtitlers and viewers think about subtitling? Universal Access in the Information Society, 20, 661–675. https://doi.org/10.1007/s10209-020-00739-2

Toral, A. (2019). Post-editese: An exacerbated translationese. Proceedings of the Machine Translation Summit, 19–23. https://doi.org/10.48550/arXiv.1907.00900

Vieira, P. A., Assis, Í. A. P., & Araújo, V. L. S. (2020). Tradução audiovisual: Estudos sobre a leitura de legendas para surdos e ensurdecidos. Cadernos de Tradução, 40(spe.2), 97–124. https://doi.org/10.5007/2175-7968.2020v40nesp2p97

Volk, M. (2008). The automatic translation of film subtitles: A machine translation success story? Journal for Language Technology and Computational Linguistics, 24(3), 115–128. https://doi.org/10.21248/jlcl.24.2009.124