The Empathic Communication Analytical Framework (ECAF): A multimodal perspective on emotional communication in interpreter-mediated consultations

Laura Theys

KU Leuven, Ghent University, Belgium

laura.theys@kuleuven.be

https://orcid.org/0000-0002-7968-8148

Lise Nuyts

KU Leuven, Belgium

lise.nuyts@student.kuleuven.be

Peter Pype

Ghent University, Belgium

peter.pype@ugent.be

https://orcid.org/0000-0003-2273-0250

Willem Pype

Ghent University, Belgium

willem.pype@ugent.be

Cornelia Wermuth

KU Leuven, Belgium

cornelia.wermuth@kuleuven.be

https://orcid.org/0000-0001-5144-8508

Demi Krystallidou

University of Surrey, UK

d.krystallidou@surrey.ac.uk

https://orcid.org/0000-0002-4821-6383

Abstract

Empathic communication (EC) in healthcare occurs when patients express empathic opportunities, such as emotions, to which doctors respond empathically. This interactional process during which participants try to achieve specific communicative goals (e.g., seeking and displaying empathy) serves as a context in which doctors and patients perform verbal and nonverbal actions and collaboratively co-construct meaning. This applies to interpreter-mediated consultations (IMCs) too, where interpreters perform additional actions of a similar kind. However, there is a dearth of research on the ways in which participants perform these actions in the context of EC, and how these actions in turn help (re)shape the context of EC in IMCs (Theys et al., 2020). To date, the available tools for studying EC investigate participants’ actions in isolation, without studying them in the context of EC or in relation to the participants’ awareness of their own and others’ ongoing interactions. In this article, we present the Empathic Communication Analytical Framework (ECAF). The tool draws on valid, complementary analytical tools that allow for a fine-grained, three-level multimodal analysis of interactions. The first level of analysis allows for instances of EC in spoken language IMCs to be identified and for participants’ verbal actions in the context of EC to be studied. The second level allows analysts to investigate participants’ verbal and nonverbal actions in the previously identified context of EC. The third level of analysis links the participants’ concurrent verbal and nonverbal (inter)actions to their levels of attention and awareness and shows how participants’ actions are shaped and in turn help to reshape the context of EC in IMCs. In this article, we present the various levels of the ECAF framework, discuss its application to real-life data, and adopt a critical stance towards its affordances and limitations by looking into one excerpt of EC in IMCs. It is shown that the three distinct yet interconnected levels of analysis in the ECAF framework allow participants’ concurrent multimodal interactions in the context of EC to be studied.

Keywords: healthcare interpreting; multimodal interaction analysis; empathy; nonverbal communication; emotions

1 Introduction

In healthcare settings, doctors are expected to adopt a patient-centered approach where they treat not only the patient’s medical disease but also their illness experience (Stewart et al., 2013). This influences the interactional context in which doctors and patients communicate with each other by means of verbal and nonverbal resources in a dynamic, interactive process to co-construct meaning and achieve shared understanding (Hsieh et al., 2016). During this process, doctors and patients are engaged in multiple concurrent (inter)actions that are aimed at achieving certain communicative goals (e.g., seeking and conveying empathy) (Silverman et al., 2013). The ways in which doctors and patients try to achieve these communicative goals will also have an influence on the interaction (Bensing et al., 2003).

In order to provide person-centered care, doctors are expected to communicate empathically (Hojat, 2016; Stewart et al., 2013). Empathic communication (henceforth EC) entails sequences in an interaction through which patients express empathic opportunities to which doctors express understanding in the form of an empathic response (Hojat, 2016). Patients’ empathic opportunities can be summarized in three main verbal manifestations: emotion (e.g., I’m scared), challenge (e.g., I can’t go to work because of my back pain) or progress (e.g., we got married) (Bylund & Makoul, 2002, 2005). If patients’ need for empathy is met, their health outcomes and doctor–patient satisfaction can improve (Kerasidou, 2020; Yaseen & Foster, 2020).

In the interactional context of EC, participants rely on verbal and nonverbal semiotic resources to achieve their communicative goal of conveying (a need for) empathy (Brugel et al., 2015). These nonverbal semiotic resources can be paralinguistic (e.g., tone of voice, intonation) or kinetic (e.g., gaze, body orientation) (Poyatos, 2002). However, research has focused mainly on verbal EC (Gorawara-Bhat et al., 2017) and has shown that doctors struggle to detect, identify, and empathically respond to patients’ empathic opportunities (Blanch-Hartigan, 2013). Only Gorawara-Bhat et al. (2017) shed more light on the ways in which participants use an interplay of verbal and nonverbal modes in the context of EC and showed that doctors’ verbal actions might be incongruent with their nonverbal actions. As a result, doctors might fail to meet patients’ need for empathy in the local context of EC and might fail to deliver high-quality patient-centered care in the broader context of healthcare practice.

There is an even greater dearth of research on EC and its verbal and nonverbal aspects in interpreter-mediated consultations (henceforth IMCs) (Theys et al., 2020). Various studies analyzed verbal and nonverbal aspects of the interpreter-mediated interaction and showed that interpreters, doctors, and patients verbally and nonverbally attune their actions to each other in order to co-construct meaning in IMCs (Krystallidou, 2014; Krystallidou & Pype, 2018; Pasquandrea, 2011, 2012; Wadensjö, 2001). However, these studies did not focus on the context of EC itself. Only a few studies have homed in on the context of EC in IMCs (Gutierrez et al., 2019; Krystallidou et al., 2020; Merlini & Gatti, 2015) and showed that, similar to monolingual consultations, doctors’ and interpreters’ verbal actions might fail to meet the patients’ need for empathy at the local level of EC, and fail to deliver patient-centered care within the broader context of healthcare practice.

To date, only two studies have focused on the context of EC in IMCs and studied the verbal and nonverbal aspects of the interaction (Hofer, 2020; Lan, 2019). They concluded that interpreters’ verbal and nonverbal actions might limit patients’ ability to participate in the empathic interaction (Hofer, 2020) and might be perceived as more or less empathic by the other participants (e.g., more empathic: direct gaze; less empathic: averted gaze or body orientation) (Lan, 2019). However, these studies did not link participants’ actions to their awareness of their own and each other’s ongoing (inter)actions. Therefore, more research is needed to explore how the complex interactional phenomenon of EC takes place in IMCs.

As shown by the existing dearth of research, an effective tool is lacking with which to study verbal and nonverbal aspects of the interpreter-mediated interaction in the context of EC (Theys et al., 2020). The aim of this study is to present the Empathic Communication Analytical Framework (ECAF) for IMCs, which was developed by Theys and Krystallidou in response to this need. The framework makes it possible to study the ways in which the context of EC in IMCs shapes and is reshaped by interpreters’, doctors’, and patients’ (attention to) concurrent verbal and nonverbal (inter)actions. In addition, we aim to discuss the ways in which the framework can be applied and we adopt a critical stance towards its affordances and limitations by investigating one excerpt of EC in an authentic IMC. For reasons of space, we focus on one form of EC centered on emotions.

2 Methodology

2.1 Empathic Communication Analytical Framework for IMCs

The Empathic Communication Analytical Framework (henceforth ECAF framework) allows for a fine-grained, multimodal interaction analysis of participants’ verbal and nonverbal actions in the context of EC in spoken language IMCs. It draws on existing analytical frameworks and combines them into a three-level analysis (Table 1). Each level of analysis considers participants’ actions in the empathic interaction from a different perspective and provides complementary results that ultimately provide insight into the ways participants’ (inter)actions take shape and are shaped in the context of EC.

We made the methodological decision to use the Empathic Communication Coding System (henceforth ECCS coding system) in the level 1 analysis as a means of identifying instances of EC in interpreter-mediated interactions. As a result, the analysis using the ECAF framework begins with an investigation of the participants’ verbal interaction, after which the level 2 and 3 analyses give more insight into the participants’ nonverbal interaction in the context of EC in IMCs.

Table 1: Empathic Communication Analytical Framework (ECAF)

| | Aspects of interaction studied | Analytical framework drawn upon | Aspects of EC identified |
|---|---|---|---|
| Level 1 | Verbal interaction | ECCS coding system (Bylund & Makoul, 2002, 2005) as adapted for IMCs (Krystallidou et al., 2018) | Participants’ verbal actions in the context of EC |
| Level 2 | Verbal and nonverbal interaction | A.R.T. framework (Krystallidou, 2016) | Participants’ verbal and nonverbal actions and the way they relate to each other in interactions in the context of EC |
| Level 3 | Semiotic density of verbal and nonverbal interaction | Modal density foreground–background continuum (Norris, 2004, 2006) as used in IMCs (Krystallidou, 2014) | Participants’ levels of awareness of/attention to their own and others’ actions in the context of EC |

2.1.1 Level 1: Participants’ verbal actions in the context of EC

The aim of the level 1 analysis is to identify instances of EC where patients express empathic opportunities and doctors respond to these opportunities through an interpreter. In addition, this level of analysis will give insights into participants’ verbal actions as they are shaped and in turn reshape the context of EC.

The level 1 analysis draws on the ECCS coding system, which is a valid instrument for measuring EC in monolingual consultations (Bylund & Makoul, 2002, 2005) and was adapted for IMCs (Krystallidou et al., 2018). It views EC as a transactional sequential process consisting of empathic opportunities (EOs) verbally expressed by patients, doctors’ empathic verbal responses to those opportunities (Bylund & Makoul, 2005), and interpreters’ renditions of those statements (Krystallidou et al., 2018). The tool distinguishes between three different types of EOs (emotion, challenge and progress) that are verbally expressed in a clear and explicit manner (Bylund & Makoul, 2002, 2005). Emotion is defined as “an affective state of consciousness in which joy, sorrow, fear, hate, or the like, is experienced” (e.g., I am scared). Challenge is a “negative effect a physical or psychosocial problem is having on the patient’s quality of life, or a recent, devastating, life-changing event” (e.g., I can’t work because of my back pain). Progress is a “positive development in physical condition that has improved quality of life, a positive development in the psychosocial aspect of the patient’s life, or a recent, very positive, life-changing event” (e.g., we got married) (Bylund & Makoul, 2002, 2005). In response to these EOs, doctors can express an empathic response that ranges from level 0 (doctor’s denial of the patient’s perspective) to level 6 (doctor and patient share a feeling or an experience) (Bylund & Makoul, 2002, 2005).

The ECCS coding procedure as adapted for IMCs takes into account typical turn-taking during EC in IMCs and allows the meaning of an EO to be coded as it reaches the doctor and not as it was intended by the patient (Table 2) (Krystallidou et al., 2018). First, the interpreter’s rendition of the patient’s EO in Dutch is coded (1), then the doctor’s empathic response (ER) in Dutch (2), subsequently the patient’s original EO in their native language (3) and, finally, the interpreter’s rendition of the doctor’s ER in the patient’s native language (4). As a final step (5), the patient’s original EO is compared to the interpreter’s rendition to identify and categorize shifts in meaning and/or intensity.

Table 2: ECCS coding procedure for IMCs

| Typical turn-taking during EC in IMCs | Step | ECCS coding procedure |
|---|---|---|
| Patient expresses EO in their native language | (3) | Coding patient’s original EO |
| Interpreter renders patient’s EO into Dutch | (1) | Coding interpreter’s rendition of patient’s EO |
| Doctor expresses level 0–6 empathic response in Dutch | (2) | Coding doctor’s ER to patient’s EO |
| Interpreter renders doctor’s level 0–6 empathic response into the patient’s native language | (4) | Coding interpreter’s rendition of doctor’s ER |
| | (5) | Coding and categorizing shifts in intensity and/or meaning between versions of EOs (1) and (3) |
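The difference between the chronological order of turns and the order in which they are coded can be made explicit in a few lines of Python. This is only an illustrative sketch: the `Turn` record, its field names, and the helper functions are hypothetical and are not part of the ECCS instrument or of our coding software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Turn:
    chronological: int  # position of the turn in the consultation
    speaker: str        # "patient", "interpreter" or "doctor"
    description: str
    coding_step: int    # step number in the adapted ECCS procedure (Table 2)


# Typical turn-taking during EC in an IMC, listed in the order the turns occur.
TURNS = [
    Turn(1, "patient", "EO in the patient's native language", 3),
    Turn(2, "interpreter", "rendition of the EO into Dutch", 1),
    Turn(3, "doctor", "empathic response (ER) in Dutch", 2),
    Turn(4, "interpreter", "rendition of the ER into the patient's native language", 4),
]


def coding_order(turns):
    """Return the turns sorted by coding step rather than by time."""
    return sorted(turns, key=lambda t: t.coding_step)


def comparison_pair(turns):
    """Step 5: pair the patient's original EO with the interpreter's rendition of it."""
    by_step = {t.coding_step: t for t in turns}
    return by_step[3], by_step[1]
```

Sorting by `coding_step` puts the interpreter's Dutch rendition first, which reflects the principle that the EO is coded as it reaches the doctor.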

2.1.2 Level 2: Participants’ verbal and nonverbal actions and the ways they relate to each other in interactions in the context of EC

The level 1 coding serves as the context for the level 2 analysis. The aim of the level 2 analysis is to gain insights into the ways in which participants verbally and nonverbally relate to each other and engage in each other’s concurrent (inter)actions.

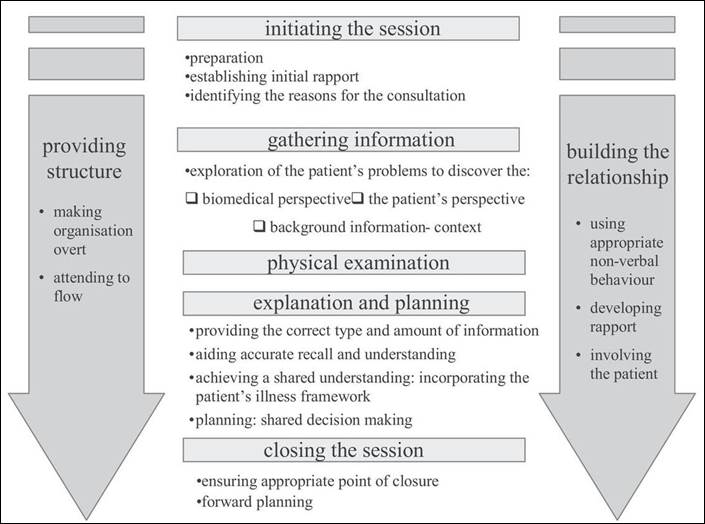

The first step in the level 2 analysis is to identify the phase of the consultation during which the previously identified context of EC took place using the enhanced Calgary-Cambridge guide to the medical interview (Silverman et al., 2013) (Figure 1). This step allows analysts to identify any correlations between participants’ observed behavior and their communicative goals (e.g., Figure 1, stage of the consultation: initiating the session, communicative goals: establishing rapport with the patient).

Figure 1: Enhanced Calgary-Cambridge guide to the medical interview (Silverman et al., 2013)

The level 2 analysis draws on the A(ctions) and R(atification) components of the A.R.T. framework (Krystallidou, 2016). The framework enables the study of participants’ use of verbal and nonverbal semiotic resources to perform concurrent (inter)actions (actions) and to relate to each other (ratification) in the context of EC.

Analysts can identify and annotate participants’ ratification by investigating the ways in which participants address each other in their interactions by means of verbal and nonverbal semiotic resources. In medical consultations, speakers are able to address the recipient of their message by means of speech (verbal ratification), gaze (visual ratification), or a combination of speech and gaze (full ratification). Yet, in IMCs, a speaker can ratify two participants simultaneously, one verbally and one by means of gaze (split ratification). In addition, doctors and patients usually pseudo-ratify each other verbally as they have no command of each other’s language but design their messages for each other (Krystallidou, 2016).
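The ratification types described above can be summarized as a small decision rule. The Python sketch below is an illustration under simplifying assumptions: the function names and the convention of passing `None` when speech or gaze is not directed at a participant are hypothetical, and pseudo-ratification is deliberately not modeled because it depends on participants' language proficiency rather than on the direction of speech and gaze.

```python
from typing import Optional


def ratification_of(participant: str,
                    speech_addressee: Optional[str],
                    gaze_target: Optional[str]) -> str:
    """Classify how a speaker ratifies one given participant.

    speech_addressee: participant addressed by the speaker's speech, or None.
    gaze_target: participant the speaker gazes at, or None (e.g., gaze at notes).
    """
    by_speech = speech_addressee == participant
    by_gaze = gaze_target == participant
    if by_speech and by_gaze:
        return "full"
    if by_speech:
        return "verbal"
    if by_gaze:
        return "visual"
    return "none"


def is_split(speech_addressee: Optional[str], gaze_target: Optional[str]) -> bool:
    """Split ratification: speech addresses one participant, gaze another."""
    return (speech_addressee is not None
            and gaze_target is not None
            and speech_addressee != gaze_target)
```

For instance, an interpreter who speaks to the doctor while looking at her notes ratifies the doctor verbally, whereas speaking to the doctor while gazing at the patient would count as split ratification.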

In addition to ratification between speakers and listeners, participants can engage in each other’s concurrent (inter)actions by means of gaze and/or body orientation. A participant can express engagement with another participant’s (inter)actions by means of gaze. When two participants gaze at each other, this is called an ‘engagement framework’. A participant can also use body orientation to include or exclude themself or others from participation in an interaction. When two participants’ bodies are mutually aligned, this is called a ‘participation framework’. Finally, participants can align their gaze and body orientation to participate and engage with each other, which is called a ‘participation and engagement framework’ (Goffman, 1981; Goodwin, 1981; Krystallidou, 2014, 2016).
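The three frameworks can likewise be expressed as a check on mutual gaze and mutual body orientation between two participants. The following Python sketch is a hypothetical illustration: the data layout (one gaze target per participant, a set of body-orientation targets per participant) and the function name are assumptions made for the example, not an annotation format prescribed by the A.R.T. framework.

```python
def framework_between(a, b, gaze, body):
    """Name the framework (if any) that holds between participants a and b.

    gaze: maps each participant to the single target of their gaze (or None).
    body: maps each participant to the set of participants towards whom
          their body is oriented.
    """
    mutual_gaze = gaze.get(a) == b and gaze.get(b) == a
    mutual_body = b in body.get(a, set()) and a in body.get(b, set())
    if mutual_gaze and mutual_body:
        return "participation and engagement framework"
    if mutual_gaze:
        return "engagement framework"
    if mutual_body:
        return "participation framework"
    return None
```

A one-sided gaze, such as a doctor looking at a patient whose gaze is averted, does not yield an engagement framework under this rule, because the definition requires that both participants gaze at each other.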

2.1.3 Level 3: Participants’ levels of awareness of/attention to their own and others’ actions in the context of EC

Whereas the level 2 analysis provides an overview of the ways in which participants use verbal and nonverbal resources to relate to each other and engage in concurrent (inter)actions, the level 3 analysis links the level 1 and 2 coding of participants’ verbal and nonverbal actions to participants’ attention levels. This perspective was not investigated during the level 2 analysis. In doing so, analysts can gain insights into the ways in which participants’ (inter)actions are attuned to each other, to the context of EC, and how this in turn can influence the context of EC.

The level 3 analysis draws on Norris’ modal density foreground–background continuum (2004, 2006). A key idea in the framework is that participants’ actions can be broken down into interdependent modes, i.e., the semiotic resources participants use in their interaction (e.g., speech, gaze and body orientation) that can be defined according to the analyst’s focus. Modal density then refers to the (intensity of the) interplay of modes that a participant uses to perform a certain (inter)action in the context of EC. The level of modal density indicates participants’ levels of attention to or awareness of a certain (inter)action (Norris, 2004, 2006). In other words, the higher the modal density in a participant’s action, the more in the foreground that action is placed in that participant’s consciousness. In doing so, multiple ongoing (inter)actions can be placed on a foreground–background continuum of a participant’s consciousness. This allows analysts to identify participants’ attention levels for concurrent (inter)actions that took shape in and could in turn reshape the context of EC in IMCs.

At the level 3 analysis, coding starts with identifying the modal density of participants’ actions by investigating the semiotic resources (modes) participants used in the context of EC, as identified in levels 1 and 2. Each participant’s action(s) is marked by a low, medium, or high modal density, depending on the number of semiotic resources participants used while performing that action and also on the weight that analysts attach a priori to each semiotic resource. Clearly, the latter is a methodological decision based on the analyst’s needs and interests and does not reflect any value judgement on a specific type of semiotic resource.

Next, the analysts can place concurrent (inter)actions on the foreground–background continuum based on their coding of modal density. The higher the modal density, the more in the foreground the action should be placed. Given that participants can perform multiple concurrent (inter)actions, each action could be marked by a different modal density and consequently could be placed on a different place on the foreground–background continuum.

2.2 Application of ECAF framework to a corpus of authentic IMCs

In what follows we discuss the way in which we applied the ECAF framework to our data. For reasons of space, we present only one excerpt of EC where the patient expresses an emotion, which the interpreter then renders and to which the doctor responds. This excerpt is taken from our dataset of seven real-life IMCs and is representative of all the identified excerpts in our dataset where the patient introduced an emotion EO. We do not claim that the findings based on this excerpt apply to EC across the board. To ascertain whether this will be the case, further research on a larger scale is required.

2.2.1 Data collection

The seven consultations that we analyzed are part of the EmpathicCare4All corpus that we collected in a large, urban hospital in Flanders, Belgium. The participants and the sample size were defined by the language combination and the number of confirmed interpreter bookings at the hospital where the data were collected. One camera was placed behind the patient and the interpreter and another one was installed behind the doctor. None of the researchers was present in the consultation room. The study was approved by the hospital ethics committee (Belgian registration number: B322201835332). All the participants were blinded to the research questions. The participants’ written informed consent was obtained before their inclusion in the study via informed consent forms that were translated by professional translators into their native languages.

The consultations were held in Dutch (the doctor’s native language), Russian, Turkish, standard Arabic, and Polish (the patients’ native languages for which interpretation was mostly required at the hospital at the time of the data collection). The consultations were held at the following departments: gynecology, endocrinology, cardiology, rheumatology, and ear, nose and throat (ENT). All the participants except one (the Polish patient) had had previous experience with IMCs. The professional interpreters were trained and certified by an independent translation and interpreting agency that is funded by the Flemish government. The interpreters were hired by the hospital on a freelance basis. The patients reported that their language proficiency in Dutch was very limited or basic. None of the doctors had any command of the patient’s native language.

2.2.2 Data processing

Professional translators (native speakers of Russian or Turkish) transcribed and translated the Russian or Turkish parts and LT, as a native Dutch speaker, transcribed the Dutch parts. One transcript was produced for each consultation. All the translators were trained in transcribing and instructed by LT and DK to flag culture-specific issues (e.g., aversion of gaze as a sign of emotional discomfort) (Lorié et al., 2017). Native speakers of the patients’ languages with expertise in the above languages verified the quality of the translations. The translators and proof-readers’ comments were included in the analysis.

2.2.3 Data coding

During the level 1 analysis, the verbal transcripts were used to identify instances of EC with emotion EOs. Across each level of analysis, the video recordings were consulted multiple times to clarify aspects of the transcripts (e.g., participants’ nonverbal behavior).

At the level 2 analysis, we focused on participants’ use of gaze and body orientation, besides speech, because both these resources have been shown to play an important role in clinical empathy (Brugel et al., 2015). In addition, we established the emotion that was expressed in every identified instance of EC using the Medical Subject Headings database (MeSH) (National Library of Medicine). This allowed us to explore whether any recurring patterns in the participants’ observed behavior were associated with the type of expressed emotion (e.g., the patient’s gaze was continuously averted when verbally expressing an emotion of fear).

At the level 3 analysis, we took speech as the primary mode of communication and assigned it a double value when coding the modal density of participants’ (inter)actions, similarly to Norris (2006). This decision was made based on our use of the ECCS coding system as a means of identifying the context of EC in participants’ verbal interaction in the level 1 analysis (Bylund & Makoul, 2002, 2005). All other modes were assigned a single value. For example, an action performed by means of gaze and body orientation was accorded a value of 2, just as an action performed by means of speech would be.
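The weighting described above can be sketched in Python as follows. The weights (speech counts double, gaze and body orientation count once) are taken from the text; the mode labels, function names, and example actions are hypothetical. Because the cut-off points between low, medium, and high modal density are not specified here, the sketch keeps the score numeric and only ranks actions relative to each other.

```python
# Weights per mode: speech is the primary mode and counts double.
MODE_WEIGHTS = {"speech": 2, "gaze": 1, "body_orientation": 1}


def modal_density(modes):
    """Sum the weights of the modes a participant uses to perform one action."""
    return sum(MODE_WEIGHTS[mode] for mode in modes)


def foreground_to_background(actions):
    """Order action names from foreground (highest density) to background.

    actions: maps an action name to the set of modes used to perform it.
    """
    return sorted(actions, key=lambda name: modal_density(actions[name]), reverse=True)
```

With these weights, an action performed through gaze and body orientation receives the same score as an action performed through speech alone, so the two would occupy the same position on the foreground–background continuum.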

The level 1 analysis was carried out by two pairs of coders (pair 1: LT & external coder, pair 2: LT & CW). The level 2 and 3 analyses were carried out by LT and LN. They worked independently and consensus was reached through discussion. All the coders were trained by DK. The final coding was checked by PP for clinical relevance.

3 Results

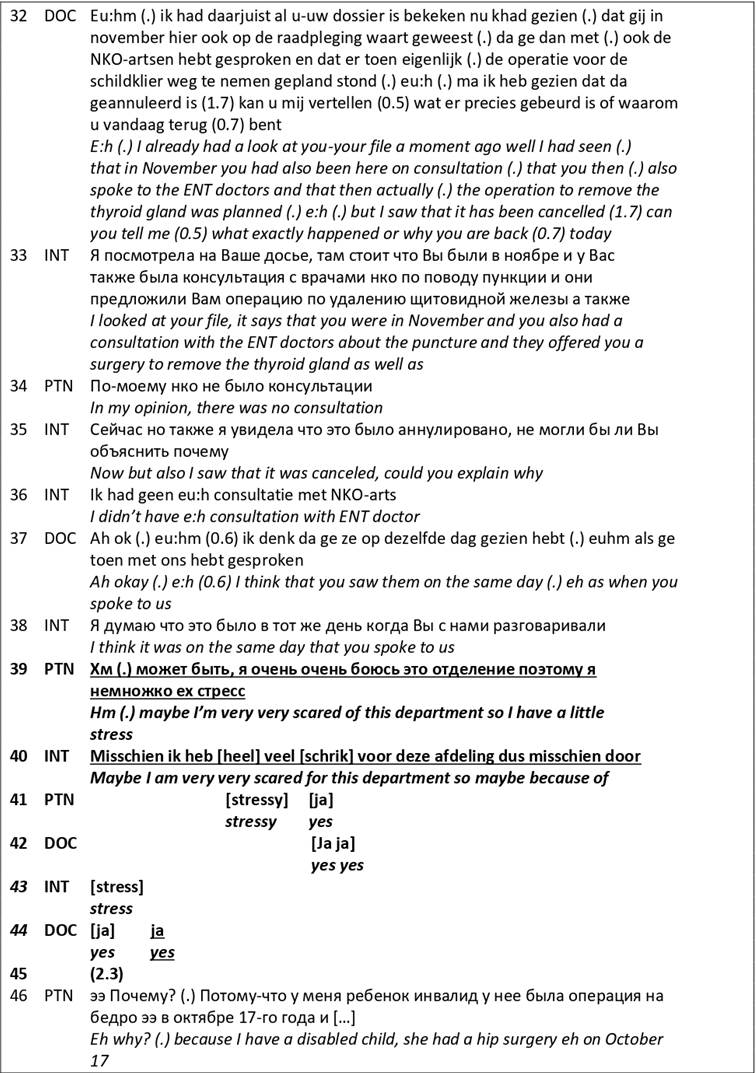

The excerpt where an emotion EO was expressed, rendered, and responded to is reproduced below. The interaction took place during a consultation between a doctor from the endocrinology department (DOC), a Russian-speaking Chechen patient (PTN), and a professional Dutch–Russian interpreter (INT). The reason for the encounter was that the patient had been diagnosed previously with thyroid cancer and surgery was planned to remove the thyroid gland. After that consultation, the patient cancelled the surgery. In the recorded consultation, the communication was centered on clarifying the patient’s reasons for cancelling the surgery, re-explaining the patient’s cancer diagnosis, and rescheduling the surgery.

3.1 Level 1: Participants’ verbal actions in the context of EC

We used symbols from the Jefferson Transcription System in our transcripts (Jefferson, 1984) (see annex). The translation of the participants’ utterances into English is provided in italics. Overlapping utterances are placed between brackets and below each other. The patient’s emotion EO, its rendition by the interpreter, and the doctor’s response to this EO are in bold and underlined.

Table 3: Example of EC with patient-expressed emotion EO

In Table 3 (line 32), the doctor opens the conversation with a twofold question about the patient’s reasons for cancelling the surgery and why she wanted to see the doctor. The interpreter translates the doctor’s statement for the patient (line 33) but before she can finish her rendition, the patient intervenes and states that she did not have a prior consultation (line 34). The interpreter does not immediately render the patient’s response but first finishes her interpretation of the doctor’s statement (line 35) and then renders the patient’s statement to the doctor (line 36). The doctor takes the next turn and states that she thinks that the patient saw the ENT doctors after she had seen them the last time (line 37). The interpreter’s rendition of the doctor’s turn to the patient (line 38) is followed up by the patient’s expression of an emotion EO stating that she is very scared of the doctor’s department and stressed (line 39). This utterance is an explicit statement reflecting the patient’s affective state during the consultation, hence our coding of an emotion EO. The patient’s emotion EO conveyed a certain need for empathy and invited the doctor to respond empathically to her emotion. This created a context of EC in their interaction. The interpreter renders the emotion EO in line 40 but paraphrases it and replaces the patient’s choice of words, ‘so I have a little stress’ (line 39), with ‘because of stress’ (line 40). Even though the patient’s choice of words conveyed the patient’s affective state, the interpreter’s choice of words suggests that the patient is explaining why she previously denied having seen the ENT doctors. In doing so, the interpreter explicitly conveyed why the patient expressed an emotion EO, even though this remained implicit in the patient’s utterance. In this way, the interpreter might have changed the meaning of the patient’s utterance. In parallel to the interpreter’s rendition, the patient again utters the word ‘stressy’ in Dutch and a ‘yes’ continuer (line 41). The doctor also utters a few ‘yes’ continuers that overlap with the interpreter’s words and expresses one final ‘yes’ after the interpretation in response to the patient’s emotion EO (line 44). After the doctor’s final ‘yes’, there is a silence of a few seconds (line 45), during which the doctor does not elaborate her response to the expressed EO and the interpreter does not render the doctor’s response. Instead, the patient picks up the second part of the doctor’s initial question and starts explaining why she cancelled the surgery (line 46). With her response to the patient’s emotion EO (‘yes’, line 44), the doctor gives an automatic, scripted-type response, giving the empathic opportunity minimal recognition (Level 1 ECCS, see Annex).

The level 1 analysis allowed us to identify a context of EC in the conversation about the patient’s reasons for cancelling the surgery and seeing the doctor. Our coding shows that, besides the patient’s overarching communicative goal of answering the doctor’s questions, the patient uttered an emotion EO and seemed to convey a need for empathy. However, the interpreter changed the patient’s emotion EO into a statement about stress being the reason for the patient denying having a previous consultation at ENT. In doing so, the interpreter’s verbal action downplayed the patient’s expressed need for empathy in her rendition that reached the doctor. This in turn might have limited the doctor’s perception of the patient’s experienced emotion and need for empathy. As a result, it is possible that the doctor expressed continuers that only minimally recognized the patient’s expressed emotion. In short, the doctor’s response to the patient’s expressed EO might have failed to meet the patient’s need for empathy as a result of the interpreter’s verbal action that changed the meaning of the patient’s original verbal statement. This in turn could mean that the interpreter and the doctor’s verbal actions were not adequately attuned to the patient’s expressed need for empathy, and that could have a negative effect on the context of EC.

3.2 Level 2: Participants’ verbal and nonverbal actions and how they relate to each other in interaction in the context of EC

The results of the level 1 analysis served as the context in which we looked into participants’ nonverbal concurrent (inter)actions when the patient expressed the emotion EO and the doctor responded to it through an interpreter.

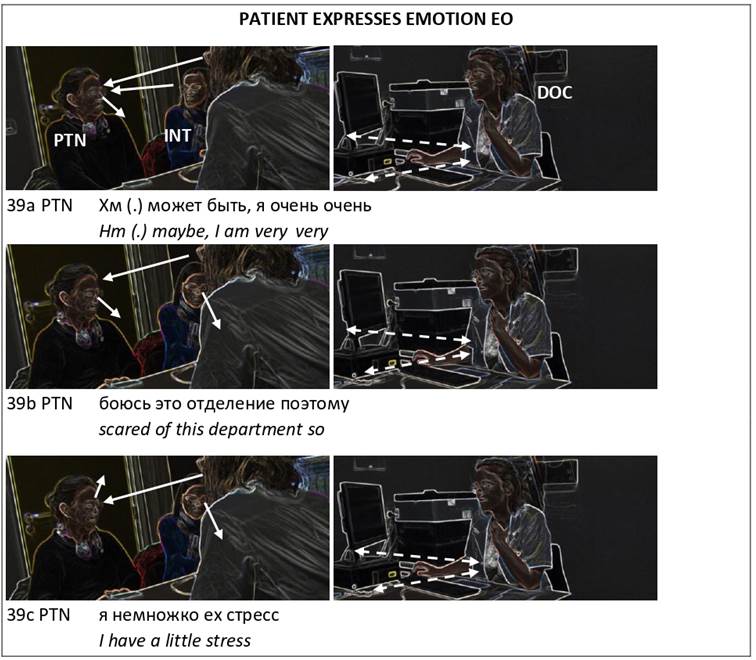

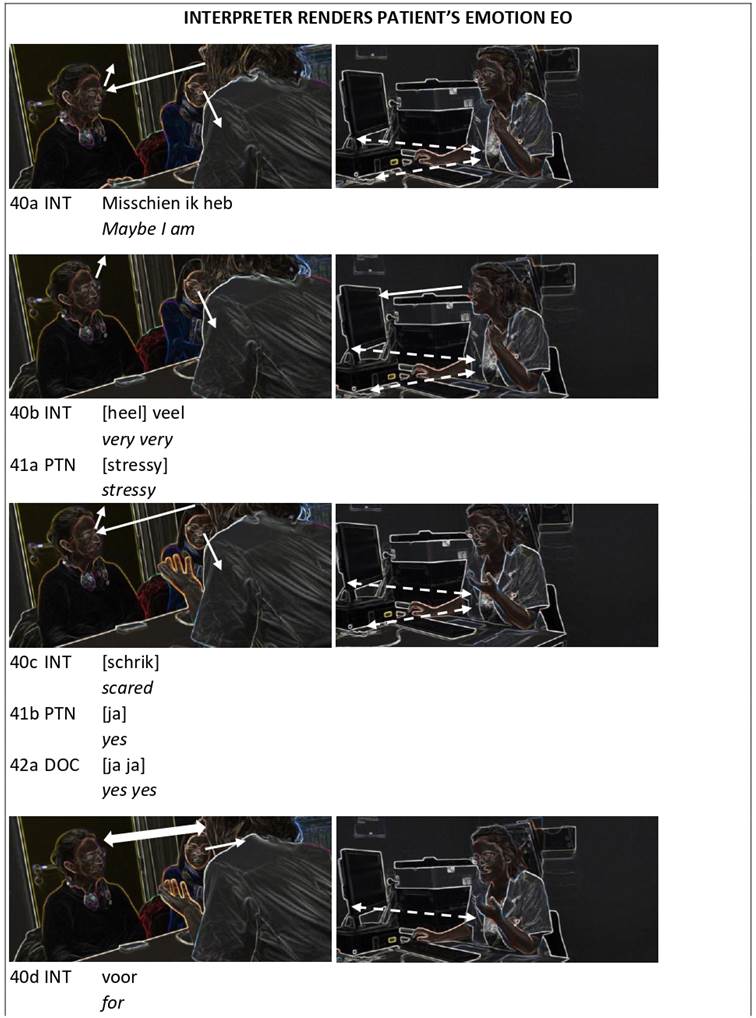

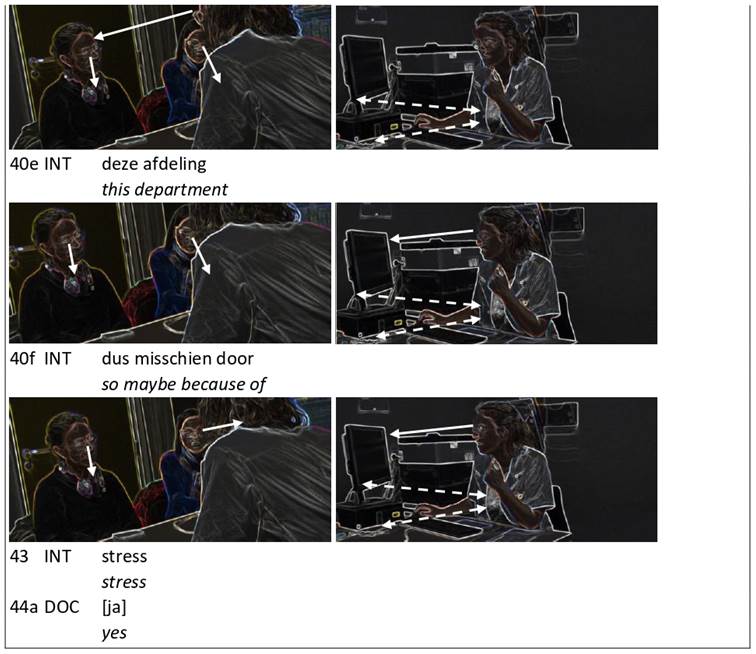

The video stills below show each shift in a participant’s gaze and/or body orientation. The direction of gaze is marked with a single full arrow and an engagement framework (mutual gaze) with a double full arrow. Body orientation is indicated with a single dotted arrow and a participation framework (participants’ mutually aligned body orientation) with a double dotted arrow. A participation and engagement framework is indicated with a large double arrow.

Figure 2: Example of participants’ actions and ratification process in EC when patient expresses emotion EO

In Figure 2, when the patient uttered her emotion, she verbally ratified the interpreter and verbally pseudo-ratified the doctor. She did not respond to the doctor’s (39a–c) or to the interpreter’s patient-directed gaze (39a), which signaled engagement in the patient’s verbal action of expressing an emotion EO. Instead, the patient visually excluded herself from the interaction by averting her gaze from the other participants (looking down, 39a–b, and then up, 39c).

Figure 3: Example of participants’ actions and ratification process in EC when interpreter renders patient’s emotion EO

When the interpreter rendered the patient’s emotion EO and changed its meaning, the interpreter verbally ratified the doctor and fully ratified her twice by shifting her gaze from her notes to the doctor. The first time the interpreter glanced at the doctor was after the latter had expressed a few continuers (Figure 3, 40c–d). The second time the interpreter gazed at the doctor was at the end of her turn, selecting the doctor as the next speaker (Figure 3, 43). Neither of these doctor-directed glances was responded to, as the doctor gazed at the patient instead, trying to engage with her (Figure 3, 40a & 40c–e). Only twice did the doctor glance at her computer: once at the start of the interpreter’s rendition and once near the end of it (Figure 3, 40b & 40f–43). Overall, the doctor’s patient-directed gaze and associated expression of engagement was not reciprocated by the patient. Only once, after the patient verbally uttered the words ‘stressy’ and ‘yes’, did the patient respond briefly to the doctor’s gaze, creating a participation and engagement framework between them (Figure 3, 40d). Shortly after that, the patient broke off the participation and engagement framework with the doctor by averting her gaze downwards and visually excluding herself from the interaction again (Figure 3, 40e–43).

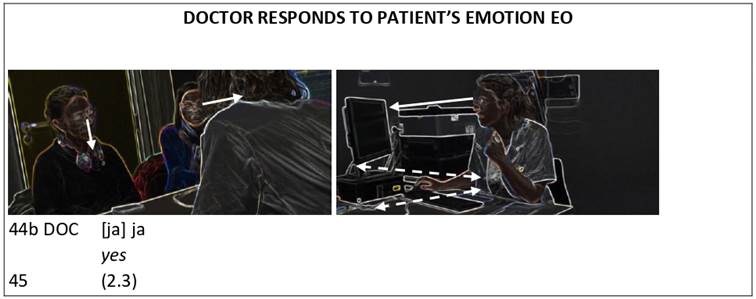

Figure 4: Example of participants’ actions and ratification process in EC when doctor responds to patient’s emotion EO and interpreter omits doctor’s empathic response

In Figure 4, when the doctor responded to the patient’s emotion EO and minimally recognized the patient’s emotion verbally, the doctor verbally ratified the interpreter and verbally pseudo-ratified the patient. The doctor did not respond to the interpreter’s doctor-directed gaze which expressed engagement in the doctor’s verbal action. Instead, the doctor focused her gaze on the computer. Meanwhile, the patient averted her gaze from the other participants, visually excluding herself from the interaction and displaying a lack of engagement in the doctor’s response.

Throughout the context of EC, the doctor was continuously in a participation framework with the patient and the interpreter by means of body orientation (Figures 2–4). In contrast, the patient and the interpreter did not orient their bodies towards each other, meaning that they signaled unavailability to interact with each other.

The level 1 analysis showed that, at a verbal level, the doctor’s actions might not have been adequately attuned to the patient’s expressed need for empathy (Table 3). Now, the level 2 analysis shows that the doctor, and to a lesser extent the interpreter, signaled engagement in the patient’s expression of emotion by means of a patient-directed gaze (Figure 2). The doctor also continuously participated in interaction with the patient by means of body orientation and kept engaging with the patient by means of gaze during the interpreter’s rendition (Figures 2–3). In other words, the interpreter and the doctor’s nonverbal actions were more attuned to the context of EC than their verbal actions appeared to be.

The level 2 analysis also highlighted a contradiction in the patient’s verbal and nonverbal actions: the patient verbally conveyed an emotion, signaling a need for empathy, but nonverbally did not respond to the interpreter and doctor’s signals of engagement expressed by means of their patient-directed gaze (Figures 2–3). In other words, the patient’s unresponsiveness to the doctor and interpreter’s signal of engagement suggested that the patient’s need for empathy might not have been as pressing as expressed in her verbal action. The incongruent message that emerged from the patient’s verbal and nonverbal actions could also explain why the doctor might have only minimally recognized the patient’s verbally expressed emotion EO.

The level 2 analysis also showed that the interpreter and the doctor gazed at their notes or the computer while they verbally rendered or responded to the patient’s emotion EO (Figures 3–4). This suggested that they were involved in multiple concurrent (inter)actions. To gain a better understanding of how the interpreter and the doctor divided their attention between these (inter)actions and how this could affect the context of EC, we performed a level 3 analysis.

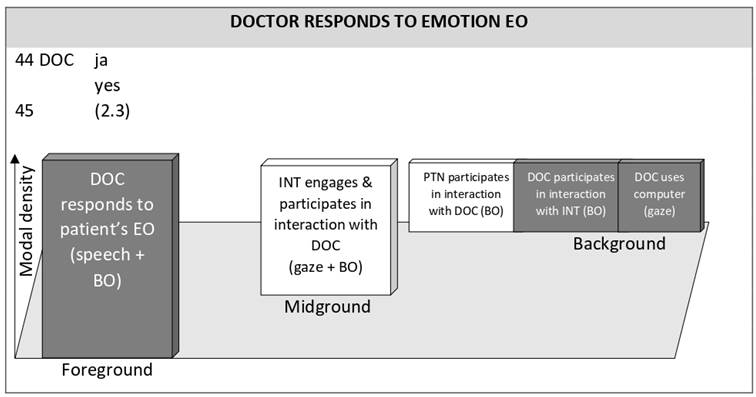

3.3 Level 3: Participants’ levels of awareness of/attention to their own and others’ actions in the context of EC

Participants’ concurrent (inter)actions are displayed in the foreground–background continuum below. The speaker’s actions appear in a dark grey box. The modes participants used are placed between brackets. Body orientation is abbreviated as ‘BO’.

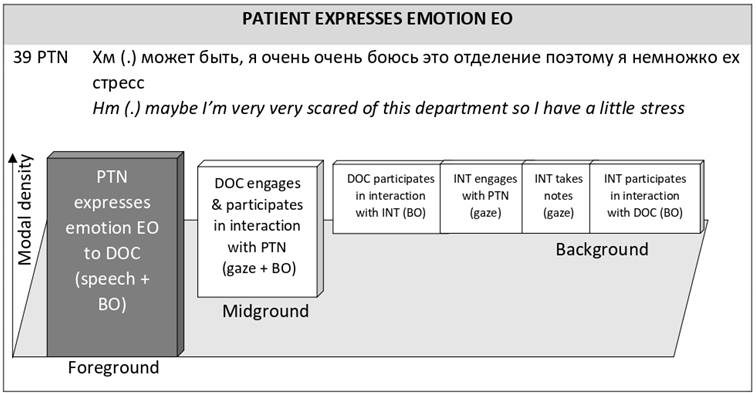

Figure 5: Modal density foreground–background continuum for EC when patient expresses emotion EO

In Figure 5, when the patient expressed an emotion EO in the context of EC, the patient’s use of speech and body orientation suggests that the patient focused her attention solely on her interaction with the doctor. Meanwhile, the interpreter divided her attention between note-taking, engaging with the patient by means of gaze, and participating in the interaction with the doctor by means of body orientation. The doctor also divided her attention between her concurrent interactions with the patient and the interpreter by means of body orientation. However, the doctor was more attentive to the doctor–patient interaction as both her gaze and her body orientation were directed at the patient.

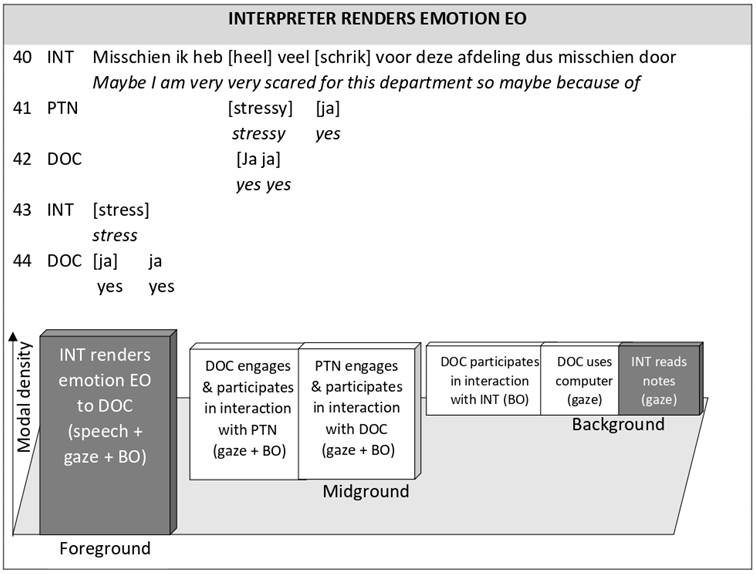

Figure 6: Modal density foreground–background continuum for EC when interpreter renders patient’s emotion EO

In Figure 6, when the interpreter rendered the patient’s EO in the context of EC, the interpreter again paid attention to her notes, by means of gaze, and focused most of her attention on her interaction with the doctor, by means of speech, gaze, and body orientation. Meanwhile, the doctor divided her attention between the doctor–patient interaction, the doctor–interpreter interaction and her use of the computer. Her attention was mostly focused on the doctor–patient interaction by means of gaze and body orientation, and less so on the doctor–interpreter interaction or the use of the computer. During this time, the patient’s attention to the doctor–patient interaction decreased as she no longer used speech to address the doctor.

Figure 7: Modal density foreground–background continuum for EC when doctor responds to patient’s emotion EO and interpreter omits doctor’s empathic response

In Figure 7, when the doctor responded to the patient’s EO in the context of EC, the doctor’s attention levels for her concurrent (inter)actions did not change. The doctor focused most of her attention on her interaction with the patient by means of speech and body orientation, while also paying some attention to the doctor–interpreter interaction and her use of the computer. Meanwhile, the interpreter focused all her attention on the doctor by means of gaze and body orientation. The patient’s attention to the doctor–patient interaction decreased even more as she no longer used gaze to address the doctor and participated in interaction with the doctor only through body orientation.

The level 2 analysis showed that the interpreter and doctor’s nonverbal actions signaled engagement towards the patient while performing other (inter)actions. The patient, however, seemed rather unresponsive to these signals (Figures 2–4). The level 3 analysis now shows that, throughout the context of EC, the doctor was more attentive to her interactions with the patient than to her use of the computer or her interaction with the interpreter (Figures 5–7). This insight supports the level 2 finding that the doctor was nonverbally more attuned to the patient’s expressed need for empathy and prioritized the doctor–patient interaction over her communicative goal of capturing information.

The level 3 analysis also showed that, throughout the context of EC, doctor and patient paid more attention to each other than to the interpreter (Figures 5–7). The doctor and the patient’s mutual attention suggests that they were aligned with each other and prioritized their communicative goal of establishing an interpersonal relationship in the context of EC. Meanwhile, the interpreter’s attention levels suggest that she was more aligned with the doctor than with the patient (Figures 6–7).

4 Discussion and conclusion

4.1 Discussion

In this article, we presented the ECAF framework as a tool that was specifically developed for the study of participants’ multimodal interactions in the context of EC in IMCs. Our analysis of one excerpt of EC pointed out some of the affordances of the tool and provided some promising pathways for future enquiries.

The ECAF framework responds to the need for an analytical tool that allows researchers to conduct an in-depth multimodal analysis of the verbal and nonverbal aspects of participants’ (inter)actions in the context of EC in IMCs (Theys et al., 2020). The results that emerged from performing such a multimodal analysis of an instance of EC by means of the ECAF framework seem promising. Similarly to the findings of previous studies (Hofer, 2020; Krystallidou et al., 2020; Krystallidou et al., 2018), we found that the interpreter’s verbal rendition of the patient’s emotion EO and the doctor’s verbal empathic response to that EO might be insufficiently attuned to the patient’s verbally expressed empathic opportunity in the context of EC (see section 3.1).

Whereas previous studies did not provide evidence of other concurrent interactions beyond those on the level of verbal interaction, our analysis has shown that participants engage in a wide range of actions by means of nonverbal semiotic resources, such as gaze and body orientation, in the context of EC. The interpreter and doctor engaged in the patient’s expression of an emotion by means of gaze, whereas the patient was unresponsive to these expressions of engagement and excluded herself visually from the interaction instead (see section 3.2). The patient’s tendency to avert her gaze could have been a sign of her discomfort (Adams & Kleck, 2005), which reinforced her expressed need for empathy. The doctor and interpreter’s patient-directed gaze can convey a sense of understanding (Vranjes et al., 2019) and help to respond to this need for empathy (Brugel et al., 2015; Lan, 2019). On the other hand, the patient’s unresponsiveness to the interpreter and doctor’s gaze could have limited her perception of the others’ nonverbally expressed empathy, possibly resulting in the patient’s feeling unacknowledged or misunderstood in her lived experience.

These results seem to confirm that the context of EC might be compromised in IMCs by the interpreter and doctor’s verbal actions (Hofer, 2020; Krystallidou et al., 2020; Theys et al., 2020). But they also appear to show that the patient’s actions might have a similar effect on the co-construction of EC. Moreover, our results suggest that the interpreter and doctor’s use of gaze and body orientation might be better attuned to the patient’s expressed need for empathy in the context of EC, and more conducive to patient-centered care, than their verbal practice. More multimodal research is needed to explore these preliminary findings.

The ECAF framework also enables researchers to use a set of different lenses to study participants’ (inter)actions in the context of EC. The benefits of this approach become apparent from our analysis of one excerpt where each level of analysis provided a unique set of results. When viewed together, these results provide a much more comprehensive view of the interactional processes than single-layered analyses of interaction have done in the past. Equally important is the interconnectedness between the three levels of analysis (i.e., level 1 provides the context of EC for the level 2 analysis of the participants’ interaction, while level 3 links findings on the participants’ levels of attention to the findings from levels 1 and 2). More specifically, level 1 showed that the interpreter changed the meaning of the patient’s expressed EO, which could affect the doctor’s understanding of the patient’s expressed emotional EO (see section 3.1). In level 2, it became apparent that the doctor continuously tried to engage with the patient by means of gaze and body orientation (see section 3.2), although their verbal interaction at that point seemed to suggest the opposite. The doctor’s patient-directed gaze and body orientation could have served as a monitoring mechanism (Krystallidou, 2020), where the doctor relied on additional nonverbal input from the patient to gain a better understanding of the patient’s experienced emotion (Bensing et al., 1995) and to identify any discrepancies between the interpreter and patient’s input (Brugel et al., 2015; Gorawara-Bhat et al., 2017). The level 3 analysis showed that the patient and the doctor focused most of their attention on their interaction (see section 3.3). These high levels of attention could create the conditions for an empathic doctor–patient relationship (Hojat, 2016). When viewed together, these results suggest that the doctor and the patient might have been able to understand each other’s (need for) empathy, despite the interpreter’s action that could have jeopardized this understanding.

More research is needed to verify this finding, but in the meantime our analysis has shown that combining different perspectives on the participants’ interactions in the context of EC in IMCs can yield revealing insights. These insights could make a fresh evidence-based contribution to the education of interpreters and healthcare professionals.

4.2 Limitations

For now, the ECAF framework is not suitable for the study of EC in IMCs with sign language interpreters due to the developers’ lack of proficiency in sign language. Fellow scholars in sign language interpreting may wish to adapt the ECAF framework for the study of EC in sign language IMCs. The results in this study are not generalizable to EC in IMCs across the board and their validity should be tested in future research. The ECAF framework allows researchers to work in groups of coders, as we did in this study (see section 2.2.3). This gives future researchers the opportunity to divide the workload associated with the three-level analysis so that larger corpora or longer stretches of interaction can be analyzed. The ECAF framework does not allow for the study of participants’ perceptions and experiences. The analysis of observed behavior is subject to the analyst’s interpretation. The presence of cameras might have affected participants’ embodied behavior in the included excerpt.

4.3 Conclusion

The ECAF framework is a valid tool that enables the comprehensive and fine-grained micro-analysis of participants’ observed behavior in the context of EC in IMCs. The tool allows analysts to observe how the context of EC shapes and is reshaped by participants’ (attention to their) own and others’ (inter)actions. Its three distinct, yet interconnected levels of analysis allow researchers to explore largely under-investigated aspects of participants’ nonverbal (inter)actions in the context of EC in IMCs. Our analysis of one excerpt of EC in an authentic IMC serves as a promising example of the relevant results that can be generated by means of the ECAF framework.

4.4 Implications

The ECAF framework can be used to study a variety of verbal and nonverbal aspects of interpreter-mediated interactions in the context of EC, according to the scope of the researchers’ study. The results that emerge by means of the ECAF framework can be used in the (interprofessional) education of interpreters and healthcare professionals and can help them to learn how to adjust their verbal and nonverbal practices to each other’s communicative goals in the context of EC.

5 Acknowledgements

We thank the participants for allowing us to record and scrutinize their interactions and the translators and transcribers for their contributions.

6 References

Adams, R. B., & Kleck, R. E. (2005). Effects of direct and averted gaze on the perception of facially communicated emotion. Emotion, 5(1), 3–11. https://doi.org/10.1037/1528-3542.5.1.3

Bensing, J., Van Dulmen, S., & Tates, K. (2003). Communication in context: New directions in communication research. Patient Educ Couns, 50(1), 27–32. https://doi.org/10.1016/s0738-3991(03)00076-4

Bensing, J. M., Kerssens, J. J., & van der Pasch, M. (1995). Patient-directed gaze as a tool for discovering and handling psychosocial problems in general practice. Journal of Nonverbal Behavior, 19(4), 223–242. https://doi.org/10.1007/BF02173082

Blanch-Hartigan, D. (2013). Patient satisfaction with physician errors in detecting and identifying patient emotion cues. Patient Educ Couns, 93(1), 56–62. https://doi.org/10.1016/j.pec.2013.04.010

Brugel, S., Postma-Nilsenová, M., & Tates, K. (2015). The link between perception of clinical empathy and nonverbal behavior: The effect of a doctor’s gaze and body orientation. Patient Educ Couns, 98(10), 1260–1265. https://doi.org/10.1016/j.pec.2015.08.007

Bylund, C. L., & Makoul, G. (2002). Empathic communication and gender in the physician–patient encounter. Patient Educ Couns, 48(3), 207–216. https://doi.org/10.1016/s0738-3991(02)00173-8

Bylund, C. L., & Makoul, G. (2005). Examining empathy in medical encounters: An observational study using the Empathic Communication Coding System. Health Commun, 18(2), 123–140. https://doi.org/10.1207/s15327027hc1802_2

Goffman, E. (1981). Forms of talk. University of Pennsylvania Press.

Goodwin, C. (1981). Conversational organization: Interaction between speakers and hearers. Academic Press.

Gorawara-Bhat, R., Hafskjold, L., Gulbrandsen, P., & Eide, H. (2017). Exploring physicians’ verbal and nonverbal responses to cues/concerns: Learning from incongruent communication. Patient Educ Couns, 100(11), 1979–1989. https://doi.org/10.1016/j.pec.2017.06.027

Gutierrez, A. M., Statham, E. E., Robinson, J. O., Slashinski, M. J., Scollon, S., Bergstrom, K. L., Street, R. L., Parsons, D. W., Plon, S. E., & McGuire, A. L. (2019). Agents of empathy: How medical interpreters bridge sociocultural gaps in genomic sequencing disclosures with Spanish-speaking families. Patient Educ Couns, 102(5), 895–901. https://doi.org/10.1016/j.pec.2018.12.012

Hofer, G. (2020). Investigating expressions of pain and emotion in authentic interpreted medical consultations: “But I am afraid, you know, that it will get worse”. In I. E. T. de V. Souza & E. Fragkou (Eds.), Handbook of research on medical interpreting (pp. 136–164). IGI Global. https://doi.org/10.4018/978-1-5225-9308-9

Hojat, M. (2016). Empathy in health professions education and patient care. Springer. https://doi.org/10.1007/978-3-319-27625-0

Hsieh, E., Bruscella, J., Zanin, A., & Kramer, E. M. (2016). “It’s not like you need to live 10 or 20 years”: Challenges to patient-centered care in gynecologic oncologist–patient interactions. Qual Health Res, 26(9), 1191–1202. https://doi.org/10.1177/1049732315589095

Jefferson, G. (1984). On the organization of laughter in talk about troubles. In J. M. Atkinson & J. Heritage (Eds.), Structures of social action: Studies in conversation analysis (pp. 346–369). Cambridge University Press. https://doi.org/10.1017/CBO9780511665868.021

Kerasidou, A. (2020). Artificial intelligence and the ongoing need for empathy, compassion and trust in healthcare. Bulletin of the World Health Organization, 98, 245–250. https://doi.org/10.2471/BLT.19.237198

Krystallidou, D. (2014). Gaze and body orientation as an apparatus for patient inclusion into/exclusion from a patient-centred framework of communication. The Interpreter and Translator Trainer, 8(3), 399–417. https://doi.org/10.1080/1750399X.2014.972033

Krystallidou, D. (2016). Investigating the interpreter’s role(s): The A.R.T. framework. Interpreting, 18(2), 172–197. https://doi.org/10.1075/intp.18.2.02kry

Krystallidou, D. (2020). Going video: Understanding interpreter-mediated clinical communication through the video lens. In G. Brône & H. Salaets (Eds.), Linking up with video: Perspectives on interpreting practice and research (Vol. 149, pp. 181–202). John Benjamins. https://doi.org/10.1075/btl.149.08kry

Krystallidou, D., Bylund, C. L., & Pype, P. (2020). The professional interpreter’s effect on empathic communication in medical consultations: A qualitative analysis of interaction. Patient Educ Couns, 103(3), 521–529. https://doi.org/10.1016/j.pec.2019.09.027

Krystallidou, D., & Pype, P. (2018). How interpreters influence patient participation in medical consultations: The confluence of verbal and nonverbal dimensions of interpreter-mediated clinical communication. Patient Educ Couns, 101(10), 1804–1813. https://doi.org/10.1016/j.pec.2018.05.006

Krystallidou, D., Remael, A., de Boe, E., Hendrickx, K., Tsakitzidis, G., van de Geuchte, S., & Pype, P. (2018). Investigating empathy in interpreter-mediated simulated consultations: An explorative study. Patient Educ Couns, 101(1), 33–42. https://doi.org/10.1016/j.pec.2017.07.022

Lan, W. (2019). Crossing the chasm: Embodied empathy in medical interpreter assessment. https://repository.hkbu.edu.hk/etd_oa/674

Lorié, Á., Reinero, D. A., Phillips, M., Zhang, L., & Riess, H. (2017). Culture and nonverbal expressions of empathy in clinical settings: A systematic review. Patient Educ Couns, 100(3), 411–424. https://doi.org/10.1016/j.pec.2016.09.018

Merlini, R., & Gatti, M. (2015). Empathy in healthcare interpreting: Going beyond the notion of role. The Interpreters’ Newsletter, 20, 139–160. https://doi.org/10077/11857

National Library of Medicine. (n.d.). Medical subject headings. https://www.ncbi.nlm.nih.gov/mesh

Norris, S. (2004). Analyzing multimodal interaction: A methodological framework. Routledge. https://doi.org/10.4324/9780203379493

Norris, S. (2006). Multiparty interaction: A multimodal perspective on relevance. Discourse Studies, 8(3), 401–421. https://doi.org/10.1177/1461445606061878

Pasquandrea, S. (2011). Managing multiple actions through multimodality: Doctors’ involvement in interpreter-mediated interactions. Language in Society, 40(4), 455–481. https://doi.org/10.1017/S0047404511000479

Pasquandrea, S. (2012). Co-constructing dyadic sequences in healthcare interpreting: A multimodal account. New Voices in Translation Studies, 8, 132–157. https://www.iatis.org/images/stories/publications/new-voices/Issue8-2012/IPCITI/article-pasquandrea-2012.pdf

Poyatos, F. (2002). Nonverbal communication across disciplines: Volume 1: Culture, sensory interaction, speech, conversation. John Benjamins. https://doi.org/10.1075/z.ncad1

Silverman, J., Kurtz, S., & Draper, J. (2013). Skills for communicating with patients. Radcliffe Medical Press. https://doi.org/10.1201/9781910227268

Stewart, M., Brown, J. B., Weston, W., McWhinney, I. R., McWilliam, C. L., & Freeman, T. (2013). Patient-centered medicine: Transforming the clinical method. CRC Press. https://doi.org/10.1201/b20740

Theys, L., Krystallidou, D., Salaets, H., Wermuth, C., & Pype, P. (2020). Emotion work in interpreter-mediated consultations: A systematic literature review. Patient Educ Couns, 103(1), 33–43. https://doi.org/10.1016/j.pec.2019.08.006

Vranjes, J., Bot, H., Feyaerts, K., & Brône, G. (2019). Affiliation in interpreter-mediated therapeutic talk: On the relationship between gaze and head nods. Interpreting, 21(2), 220–244. https://doi.org/10.1075/intp.00028.vra

Wadensjö, C. (2001). Interpreting in crisis: The interpreter’s position in therapeutic encounters. In I. Mason (Ed.), Triadic exchanges: Studies in dialogue interpreting (pp. 71–85). Routledge.

Yaseen, Z. S., & Foster, A. E. (2020). What is empathy? In Z. S. Yaseen & A. E. Foster (Eds.), Teaching empathy in healthcare: Building a new core competency (pp. 3–16). Springer Nature Switzerland. https://doi.org/10.1007/978-3-030-29876-0

7 Annex

7.1 Transcription conventions

The Jeffersonian Transcription Notation includes the following symbols:

| Symbol | Name | Use |
| --- | --- | --- |
| [ text ] | Brackets | Indicates the start and end points of overlapping speech |
| (seconds) | Timed pause | A number in parentheses indicates the time, in seconds, of a pause in speech |
| (.) | Micropause | A brief pause, usually less than 0.2 seconds |
| ::: | Colon | Indicates prolongation of an utterance |

7.2 Levels of empathic responses in ECCS coding system

The ECCS coding system identifies several levels of empathic responses that doctors can utter in response to patients’ EOs (Bylund & Makoul, 2002, 2005).

| Level | Name | Description |
| --- | --- | --- |
| 6 | Shared feeling or experience | Physician self-discloses, making an explicit statement that he or she either shares the patient’s emotion or has had a similar experience, challenge, or progress. |
| 5 | Confirmation | Physician conveys to the patient that the expressed emotion, progress, or challenge is legitimate. |
| 4 | Pursuit | Physician explicitly acknowledges the central issue in the empathic opportunity and pursues the topic with the patient by asking the patient a question, offering advice or support, or elaborating on a point the patient has raised. |
| 3 | Acknowledgment | Physician explicitly acknowledges the central issue in the empathic opportunity but does not pursue the topic. |
| 2 | Implicit recognition | Physician does not explicitly recognize the central issue in the empathic opportunity but focuses on a peripheral aspect of the statement and changes the topic. |
| 1 | Perfunctory recognition | Physician gives an automatic, scripted-type response, giving the empathic opportunity minimal recognition. |
| 0 | Denial/disconfirmation | Physician either ignores the patient’s empathic opportunity or makes a disconfirming statement. |
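
For analysts who keep their coding in a script or spreadsheet export, the seven ECCS levels listed above could be represented as an ordered enumeration. The following is only a minimal sketch in Python and not part of the ECCS or the ECAF: the identifier names, the `summarize` helper, and the example codes are hypothetical and serve illustration purposes only.

```python
from collections import Counter
from enum import IntEnum


class ECCSLevel(IntEnum):
    """ECCS levels of empathic response (Bylund & Makoul, 2002, 2005).

    Member names are hypothetical identifiers chosen for this sketch;
    the numeric values follow the levels in the table above.
    """
    DENIAL_DISCONFIRMATION = 0
    PERFUNCTORY_RECOGNITION = 1
    IMPLICIT_RECOGNITION = 2
    ACKNOWLEDGMENT = 3
    PURSUIT = 4
    CONFIRMATION = 5
    SHARED_FEELING_OR_EXPERIENCE = 6


def summarize(codes):
    """Return (frequency of each level, highest level) for a non-empty list."""
    return Counter(codes), max(codes)


# Invented example codes, not data from the study.
coded_responses = [ECCSLevel(1), ECCSLevel(3), ECCSLevel(1)]
counts, highest = summarize(coded_responses)
print(counts[ECCSLevel.PERFUNCTORY_RECOGNITION], highest.name)
```

An integer-backed enumeration is used here so that levels can be compared and sorted directly; whether such ordering is meaningful for a given analysis remains a decision for the analyst.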